7163 lines

4.2 MiB

Plaintext

7163 lines

4.2 MiB

Plaintext

|

|

{

|

|||

|

|

"cells": [

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "90dbf366-7a84-4c76-bcd6-466feaa51a7b",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"# Transformer原理"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "d5eef42a-f6f1-4756-a325-1e7fd59813a5",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"**0 前言**<br>\n",

|

|||

|

|

" 0.1 Transformer模型的地位与发展历程<br>\n",

|

|||

|

|

" 0.2 序列模型的基本思路与根本诉求<br>\n",

|

|||

|

|

"\n",

|

|||

|

|

"**1 注意力机制**<br>\n",

|

|||

|

|

" 1.1 注意力机制的本质<br>\n",

|

|||

|

|

" 1.2 Transformer中的自注意力机制运算流程<br>\n",

|

|||

|

|

" 1.3 Multi-Head Attention 多头注意力机制<br>\n",

|

|||

|

|

"\n",

|

|||

|

|

"**2 Transformer的基本结构**<br>\n",

|

|||

|

|

" 2.1 Embedding层与位置编码技术<br>\n",

|

|||

|

|

" 2.2 Encoder结构解析<br>\n",

|

|||

|

|

" 2.2.1 残差连接<br>\n",

|

|||

|

|

" 2.2.2 Layer Normalization层归一化<br>\n",

|

|||

|

|

" 2.2.3 Feed-Forward Networks前馈网络<br>\n",

|

|||

|

|

" 2.3 Decoder结构解析<br>\n",

|

|||

|

|

" 2.3.1 完整Transformer与Decoder-Only结构的数据流<br>\n",

|

|||

|

|

" 2.3.2 Encoder-Decoder结构中的Decoder<br>\n",

|

|||

|

|

" 2.3.2.1 输入与teacher forcing<br>\n",

|

|||

|

|

" 2.3.2.2 掩码注意力机制<br>\n",

|

|||

|

|

" 2.3.2.3 普通掩码与前馈掩码<br>\n",

|

|||

|

|

" 2.3.2.4 编码器-解码器注意力层<br>\n",

|

|||

|

|

" 2.3.3 Decoder-Only结构中的Decoder<br>"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "db513437-c41a-4817-b69b-1b41be7d9e5e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"Transformer模型,作为自然语言处理(NLP)领域的一块重要里程碑,于2017年由Google的研究者们提出,现在成为深度学习中对文本和语言数据处理具有根本性影响的架构之一。在NLP的宇宙中,如果说RNN、LSTM等神经网络创造了“序列记忆”的能力,那么Transformer则彻底颠覆了这种“记忆”的处理方式——它放弃了传统的顺序操作,而是通过自注意力机制(Self-Attention),赋予模型一种全新的、并行化的信息理解和处理方式。从自注意力的直观概念出发,Transformer的设计者们引进了多头注意力(Multi-Head Attention)、位置编码(Positional Encoding)等创新元素,大幅度提升了模型处理序列数据的效率和效果。通过精巧的数学构建和模型设计,Transformer能够同时捕捉序列中的局部细节和全局上下文,解决了以往模型在长距离依赖上的困难,使其在处理长文本序列时的能力大大增强。\n",

|

|||

|

|

"\n",

|

|||

|

|

"经过几年的快速迭代,Transformer不仅优化了其原始架构,而且催生了一系列高效的后续模型如BERT、GPT-3、RoBERTa和T5等,这些模型在语言理解、文本生成等多种NLP任务上都取得了令人瞩目的成绩。如同LSTM在其领域内的长久影响一样,Transformer模型的论文和原理也成为了NLP领域的经典,而它本身的算法和架构也已成为当代处理语言数据的根基。今天,尽管存在多种高级的算法和模型,Transformer仍然是处理复杂语言模式、捕捉细腻语义的主流架构,它在多个维度上重塑了我们构建和理解语言模型的方式。现在,就让我们一起来探讨这一划时代结构背后的深邃原理。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "5f7cc248-0d1a-413d-8837-58b55c3ec268",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"## 1 Transformer模型的地位与发展历程"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "b9e56e65-a9d1-4727-bc5c-0b87dfcf5c68",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

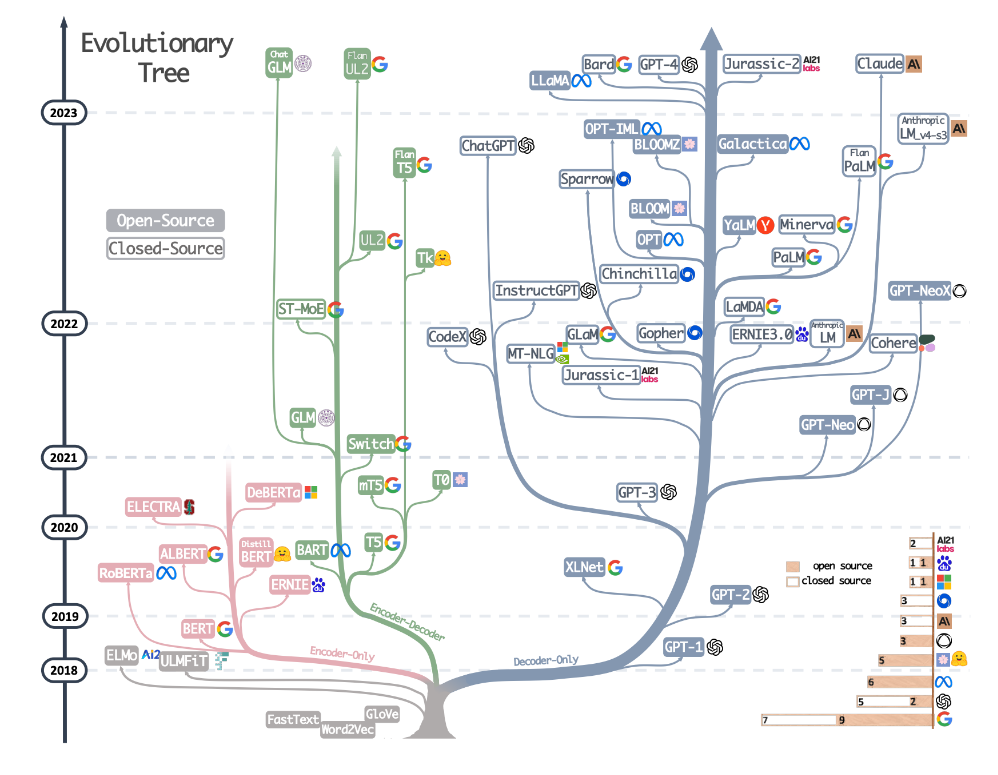

"学习Transformer并不只是学习一个算法,而是学习以Transformer为核心的一整个、基于注意力机制的大体系。在NLP领域中,有这样一张著名的树状图,它展示了从2018年到2023年的各种基于Transformer架构的语言模型的发展历程,并将模型从“开源/闭源”、“encoder/decoder/encoder-decoder”以及开发公司三个维度进行了划分。这个演化树很好地概述了从2018年到2023年基于Transformer架构的模型的发展脉络,让我们一步步来解读这个发展历史。\n",

|

|||

|

|

"\n",

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "f1848b75-6de4-48dd-862c-089cbfefc50e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 2018年:Transformer的早期探索\n",

|

|||

|

|

"> ELMo(Embeddings from Language Models)和ULMFiT(Universal Language Model Fine-tuning)虽然不是基于Transformer架构的,但它们引入了深层双向表示和迁移学习的概念,这对后来的Transformer模型产生了重要影响。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 2019年:BERT及其衍生模型\n",

|

|||

|

|

"> BERT(Bidirectional Encoder Representations from Transformers)是一个重要的里程碑,它通过自注意力机制(self-attention)使模型能够生成上下文相关的词向量。它是首个大规模双向Transformer模型,对后续的模型产生了深远影响。\n",

|

|||

|

|

"> RoBERTa 对BERT进行了改进,通过更大的数据集和更长的训练时间提高了性能。\n",

|

|||

|

|

"> ALBERT 旨在减少模型大小的同时保持性能,通过因子分解嵌入层和跨层参数共享实现。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 2020年:多样化的模型和架构\n",

|

|||

|

|

"> T5(text-to-text Transfer Transformer)将所有文本问题转化为文本到文本的格式,这样可以用相同的模型处理不同的任务。\n",

|

|||

|

|

"> BART(Bidirectional and Auto-Regressive Transformers)结合了自编码和自回归的特点,适用于序列生成任务。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 2021年:专门化与效率优化\n",

|

|||

|

|

"> ELECTRA 通过对抗性训练和效率优化来提高模型性能。\n",

|

|||

|

|

"> DeBERTa 引入了改进的注意力机制,提高了模型对词之间关系的理解。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 2022年:大型语言模型的崛起\n",

|

|||

|

|

"> GPT-3(Generative Pre-trained Transformer 3)以其庞大的参数量和强大的生成能力成为当时最大的语言模型之一。\n",

|

|||

|

|

"> Switch-C(Switch Transformers)采用了稀疏激活,允许模型扩展到非常大的尺寸而不显著增加计算成本。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 2023年:高级应用与细化模型\n",

|

|||

|

|

"> ChatGPT 和 InstructGPT 是在GPT-3基础上针对特定应用,如聊天和指令性任务,进行了优化的模型。\n",

|

|||

|

|

"> Chinchilla 和 Gopher 表示了更高级别的语言理解和生成能力。\n",

|

|||

|

|

"> FLAN 和 mT5 针对多语言任务设计,显示了模型的国际化和多样化发展。\n",

|

|||

|

|

"\n",

|

|||

|

|

"这个演化树还展示了各个模型之间的继承关系,以及它们是否开源。模型的不断迭代和创新反映了这个领域对于理解和生成人类语言能力的不断追求。每一代模型都在数据处理能力、训练方法、应用范围以及解决特定问题的能力上做出了改进。这一进程不仅推动了NLP的边界,也为人工智能的其他领域提供了宝贵的见解。\n",

|

|||

|

|

"\n",

|

|||

|

|

"当我们踏上学习Transformer的旅程时,实际上是在拥抱一个基于注意力机制的庞大而复杂的知识体系,这远远超出了单一算法的学习。Transformer及其衍生的模型不仅仅是NLP领域的工具,更是一扇窗口,透过它我们可以观察和理解语言的深层结构和流动的信息。这一体系以其独特的处理方式——自注意力机制——为核心,它挑战了传统的序列处理观念,引领我们探索如何让机器更深入地理解文本之间的复杂关系。从Transformer的基本架构到各种先进的变体,如BERT、GPT-3等,我们将学习如何让机器通过这些模型捕捉到词与词之间的微妙联系,理解语境的全局连贯性,以及如何将这些理解转化为处理多样化任务的能力。通过学习Transformer,我们不只是在掌握一种技术,更是在探索一个不断发展的领域,这个领域正推动着人工智能的边界,塑造着未来。所以,让我们开启这一段学习之旅,不仅为了掌握一个算法,更为了深入理解这个基于注意力的丰富体系,发现其在语言、思想和技术交汇处的无限可能。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "d202eeb6-3936-4814-a773-5b992b30ace0",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"## 2 序列模型的基本思路与根本诉求"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "bfe181d2-490c-487e-89f3-aa4a7eab6d09",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"要理解Transformer模型的本质,首先我们要回归到序列数据、序列模型这些基本概念上来。序列数据是一种按照特定顺序排列的数据,它在现实世界中无处不在,例如股票价格的历史记录、语音信号、文本数据、视频数据等等,主要是按照某种特定顺序排列、且该顺序不能轻易被打乱的数据都被称之为是序列数据。序列数据有着“样本与样本有关联”的特点;对时间序列数据而言,每个样本就是一个时间点,因此样本与样本之间的关联就是时间点与时间点之间的关联。对文字数据而言,每个样本就是一个字/一个词,因此样本与样本之间的关联就是字与字之间、词与词之间的语义关联。很显然,要理解一个时间序列的规律、要理解一个完整的句子所表达的含义,就必须要理解样本与样本之间的关系。\n",

|

|||

|

|

"\n",

|

|||

|

|

"对于一般表格类数据,我们一般重点研究特征与标签之间的关联,但**在序列数据中,众多的本质规律与底层逻辑都隐藏在其样本与样本之间的关联中**,这让序列数据无法适用于一般的机器学习与深度学习算法。这是我们要创造专门处理序列数据的算法的根本原因。在深度学习与机器学习的世界中,**序列算法的根本诉求是要建立样本与样本之间的关联,并借助这种关联提炼出对序列数据的理解**。唯有找出样本与样本之间的关联、建立起样本与样本之间的根本联系,序列模型才能够对序列数据实现分析、理解和预测。\n",

|

|||

|

|

"\n",

|

|||

|

|

"在机器学习和深度学习的世界当中,存在众多经典且有效的序列模型。这些模型通过如下的方式来建立样本与样本之间的关联——\n",

|

|||

|

|

"\n",

|

|||

|

|

"- ARIMA家族算法群\n",

|

|||

|

|

"> 过去影响未来,因此未来的值由过去的值加权求和而成,以此构建样本与样本之间的关联。\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$AR模型:y_t = c + w_1 y_{t-1} + w_2 y_{t-2} + \\dots + w_p y_{t-p} + \\varepsilon_t\n",

|

|||

|

|

"$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "91425e20-e6fd-4440-820a-6ff7ecef7b23",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 循环网络家族\n",

|

|||

|

|

"> 遍历时间点/样本点,将过去的时间上的信息传递存储在中间变量中,传递给下一个时间点,以此构建样本和样本之间的关联。\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$RNN模型:h_t = W_{xh}\\cdot x_t + W_{hh}\\cdot h_{t-1}$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$LSTM模型:\\tilde{C}_t = tanh(W_{xi} \\cdot x_t + W_{hi} \\cdot h_{t-1} + b_i)$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "93585a7c-b431-4963-8702-506947df6777",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 卷积网络家族\n",

|

|||

|

|

"> 使用卷积核扫描时间点/样本点,将上下文信息通过卷积计算整合到一起,以此构建样本和样本之间的关联。如下图所示,蓝绿色方框中携带权重$w$,权重与样本值对应位置元素相乘相加后生成标量,这是一个加权求和过程。\n",

|

|||

|

|

"\n",

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "78a5abd9-3453-4320-8710-8485ba9e5bc2",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"总结众多序列架构的经验,你会发现**成功的序列架构都在使用加权求和的方式来建立样本与样本之间的关联**,通过对不同时间点/不同样本点上的值进行加权求和,可以轻松构建“上下文信息的复合表示”,只要尝试着使用迭代的方式求解对样本进行加权求和的权重,就可以使算法获得对序列数据的理解。加权求和是有效的样本关联建立方式,这在整个序列算法研究领域几乎已经形成了共识。**在序列算法发展过程中,核心的问题已经由“如何建立样本之间的关联”转变为了“如何合理地对样本进行加权求和、即如何合理地求解样本加权求和过程中的权重”**。在这个问题上,Transformer给出了序列算法研究领域目前为止最完美的答案之一——**Attention is all you need,最佳权重计算方式是注意力机制**。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "b6b1d67b-5fad-4fbc-95a4-c3fd2c9844ac",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"## 1.1 注意力机制的本质"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "51544e34-867c-481b-b198-30ba20025f30",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"注意力机制是一个帮助算法辨别信息重要性的计算流程,它通过计算样本与样本之间相关性来判断**每个样本之于一个序列的重要程度**,并**给这些样本赋予能代表其重要性的权重**。很显然,注意力机制能够为样本赋予权重的属性与序列模型研究领域的追求完美匹配,Transformer正是利用了注意力机制的这一特点,从而想到利用注意力机制来进行权重的计算。\n",

|

|||

|

|

"\n",

|

|||

|

|

"> **面试考点**<br><br>\n",

|

|||

|

|

"作为一种权重计算机制、注意力机制有多种实现形式。经典的注意力机制(Attention)进行的是跨序列的样本相关性计算,这是说,经典注意力机制考虑的是序列A的样本之于序列B的重要程度。这种形式常常用于经典的序列到序列的任务(Seq2Seq),比如机器翻译;在机器翻译场景中,我们会考虑原始语言系列中的样本对于新生成的序列有多大的影响,因此计算的是原始序列的样本之于新序列的重要程度。\n",

|

|||

|

|

"<br><br>不过在Transformer当中我们使用的是“自注意力机制”(Self-Attention),这是在一个序列内部对样本进行相关性计算的方式,核心考虑的是序列A的样本之于序列A本身的重要程度。\n",

|

|||

|

|

"\n",

|

|||

|

|

"在Transformer架构中我们所使用的是自注意力机制,因此我们将重点围绕自注意力机制来展开讨论,我们将一步步揭开自注意力机制对于Transformer和序列算法的意义——"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "c1987c96-899b-4c98-a7c8-a3f4354ab6d0",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 首先,**为什么要判断序列中样本的重要性?计算重要性对于序列理解来说有什么意义?**\n",

|

|||

|

|

"\n",

|

|||

|

|

"在序列数据当中,每个样本对于“理解序列”所做出的贡献是不相同的,能够帮助我们理解序列数据含义的样本更为重要,而对序列数据的本质逻辑/含义影响不大的样本则不那么重要。以文字数据为例——\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>尽管今天<font color =\"green\">下了雨</font>,但我因为<font color =\"red\">_________</font>而感到<font color =\"red\">非常开心和兴奋</font>。</center>**\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>__________</font>,但我因为拿到了<font color =\"red\">梦寐以求的工作offer</font>而感到<font color =\"red\">非常开心和兴奋</font>。</center>**\n",

|

|||

|

|

"\n",

|

|||

|

|

"观察上面两句话,我们分别抠除了一些关键信息。很显然,第一个句子令我们完全茫然,但第二个句子虽然缺失了部分信息,但我们依然理解事情的来龙去脉。从这两个句子我们明显可以看出,不同的信息对于句子的理解有不同的意义。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "237959f4-eab2-4351-8aa7-c89244cb5d2d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"在实际的深度学习预测任务当中也是如此,假设我们依然以这个句子为例——\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>尽管今天<font color =\"green\">下了雨</font>,但我因为拿到了<font color =\"red\">梦寐以求的工作offer</font>而感到<font color =\"red\">非常开心和兴奋</font>。</center>**\n",

|

|||

|

|

"\n",

|

|||

|

|

"假设模型对句子进行情感分析,很显然整个句子的情感倾向是积极的,在这种情况下,“下了雨”这一部分对于理解整个句子的情感色彩贡献较小,相对来说,“拿到了梦寐以求的工作offer”和“感到非常开心和兴奋”这些部分则是理解句子传达的正面情绪的关键。因此对序列算法来说,如果更多地学习“拿到了梦寐以求的工作offer”和“感到非常开心和兴奋”这些词,就更有可能对整个句子的情感倾向做出正确的理解,就更有可能做出正确的预测。\n",

|

|||

|

|

"\n",

|

|||

|

|

"当我们使用注意力机制来分析这样的句子时,自注意力机制可能会为“开心”和“兴奋”这样的词分配更高的权重,因为这些词直接关联到句子的情感倾向。在很长一段时间内、长序列的理解都是深度学习世界的业界难题,在众多研究当中研究者们尝试着从记忆、效率、信息筛选等等方面来寻找出路,而注意力机制所走的就是一条“提效”的道路。**如果我们能够判断出一个序列中哪些样本是重要的、哪些是无关紧要的,就可以引导算法去重点学习更重要的样本,从而可能提升模型的效率和理解能力**。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "20a4906a-2812-46ad-b3e7-51cb48dc277c",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 第二,**那样本的重要性是如何定义的?为什么?**\n",

|

|||

|

|

"\n",

|

|||

|

|

"自注意力机制通过**计算样本与样本之间的相关性**来判断样本的重要性,在一个序列当中,如果一个样本与其他许多样本都高度相关,则这个样本大概率会对整体的序列有重大的影响。举例说明,看下面的文字——\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>经理在会议上宣布了重大的公司<font color =\"red\">______</font>计划,员工们反应各异,但都对未来充满期待。</center>**\n",

|

|||

|

|

"\n",

|

|||

|

|

"在这个例子中,我们抠除的这个词与“公司”、“计划”、“会议”、“宣布”和“未来”等词汇都高度相关。如果我们针对这些词汇进行提问,你会发现——\n",

|

|||

|

|

"\n",

|

|||

|

|

"**公司**做了什么?<br>\n",

|

|||

|

|

"**宣布**了什么内容?<br>\n",

|

|||

|

|

"**计划**是什么?<br>\n",

|

|||

|

|

"**未来**会发生什么?<br>\n",

|

|||

|

|

"**会议**上的主要内容是什么?\n",

|

|||

|

|

"\n",

|

|||

|

|

"所有这些问题的答案都围绕着这一个被抠除的词产生。这个完整的句子是——\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>经理在会议上宣布了重大的公司<font color =\"red\">重组</font>计划,员工们反应各异,但都对未来充满期待。</center>**\n",

|

|||

|

|

"\n",

|

|||

|

|

"被抠掉的部分是**重组**。很明显,重组这个词不仅提示了事件的性质、是整个句子的关键,而且也对其他词语的理解有着重大的影响。这个单词对于理解句子中的事件——公司正在经历重大变革,以及员工们的情绪反应——都至关重要。如果没有“重组”这个词,整个句子的意义将变得模糊和不明确,因为不再清楚“宣布了什么”以及“未来期待”是指什么。因此,“重组”这个词很明显对整个句子的理解有重大影响,而且它也和句子中的其他词语高度相关。\n",

|

|||

|

|

"\n",

|

|||

|

|

"相对的,假设我们抠掉的是——\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>经理在会议上宣布了重大的公司<font color =\"red\">重组</font>计划,______反应各异,但都对未来充满期待。</center>**\n",

|

|||

|

|

"\n",

|

|||

|

|

"你会发现,虽然我们缺失了一些信息,但实际上这个信息并不太影响对于整体句子的理解,我们甚至可以大致推断出缺失的信息部分。这样的规律可以被推广到许多序列数据上,在序列数据中我们认为**与其他样本高度相关的样本,大概率会对序列整体的理解有重大影响。因此样本与样本之间的相关性可以用来衡量一个样本对于序列整体的重要性**。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "2e73db0e-9032-4ea5-9b6d-5d1cc19f41d7",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 第三,**样本的重要性(既一个样本与其他样本之间的相关性)具体是如何计算的?**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "ed8a88d4-6b6e-4319-8d0a-0016064adc5e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

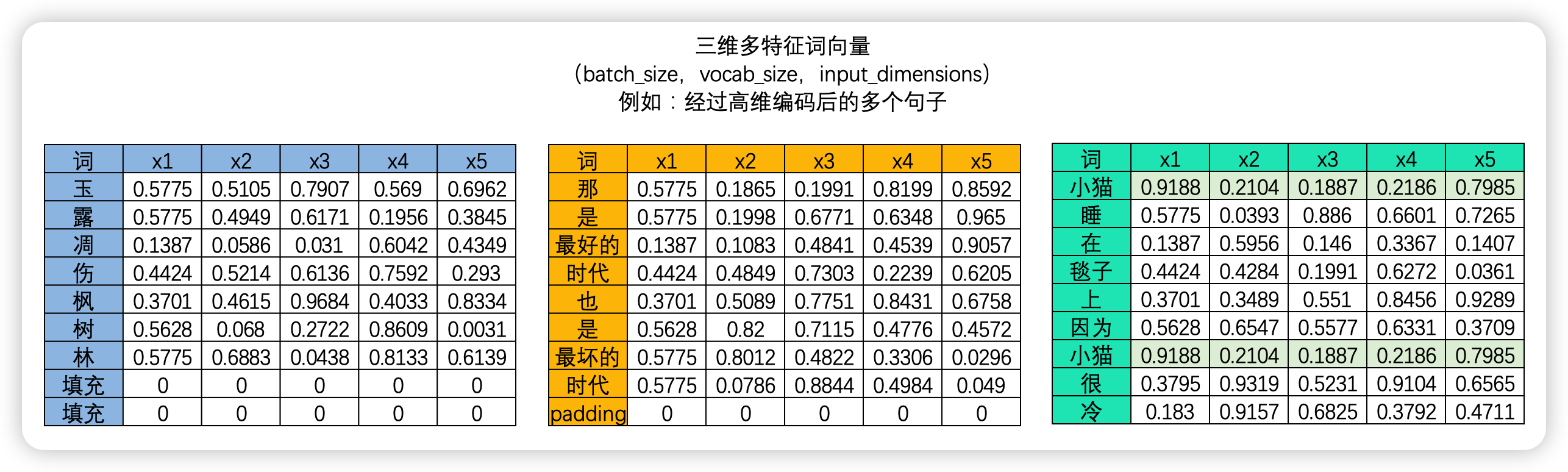

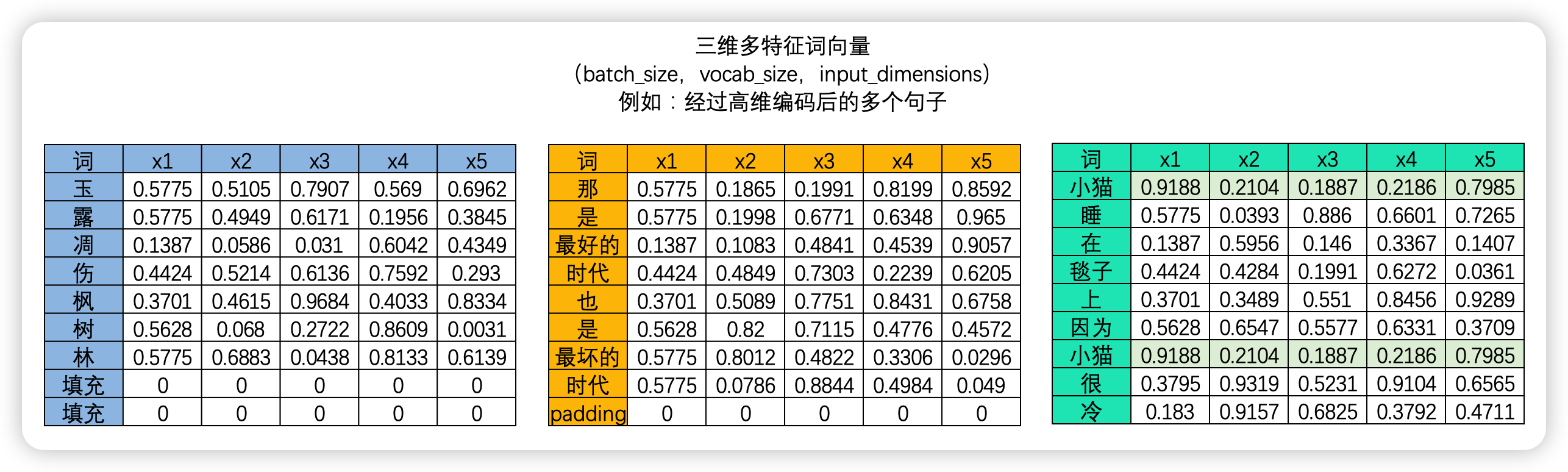

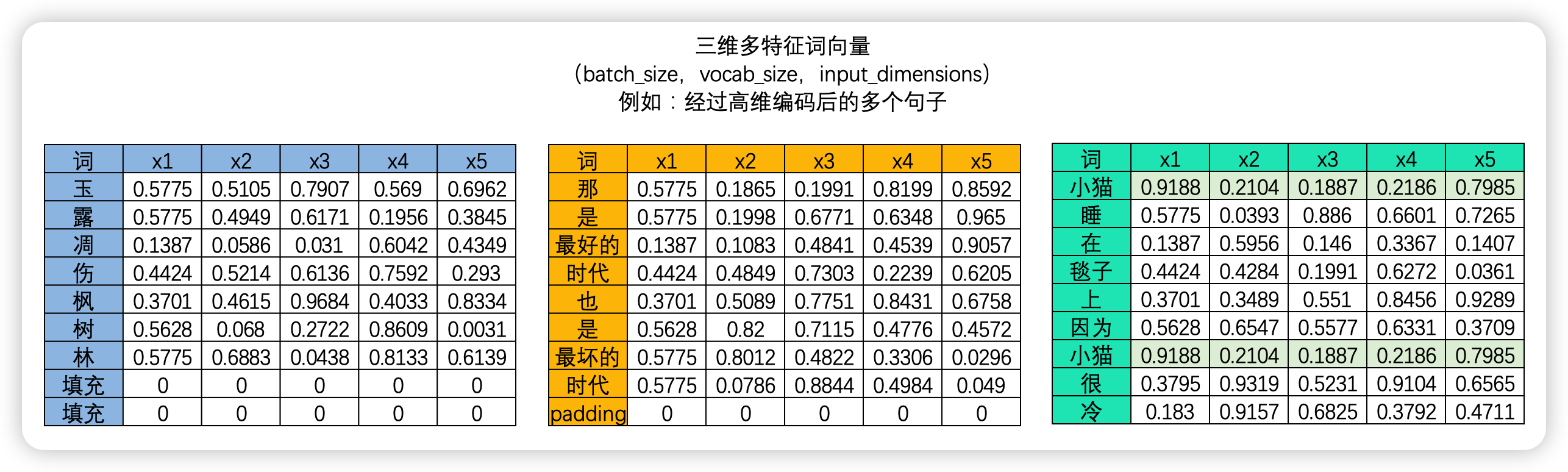

"在NLP的世界中,序列数据中的每个样本都会被编码成一个向量,其中文字数据被编码后的结果被称为词向量,时间序列数据则被编码为时序向量。\n",

|

|||

|

|

"\n",

|

|||

|

|

"\n",

|

|||

|

|

"\n",

|

|||

|

|

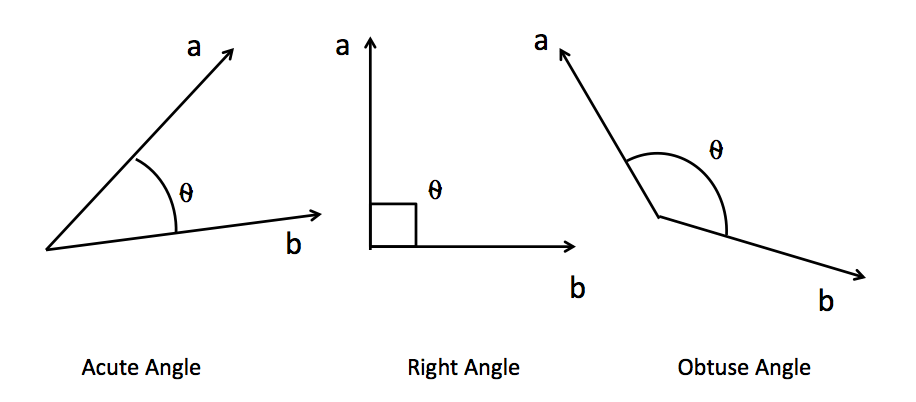

"因此,要计算样本与样本之间的相关性,本质就是计算向量与向量之间的相关性。**向量的相关性可以由两个向量的点积来衡量**。如果两个向量完全相同方向(夹角为0度),它们的点积最大,这表示两个向量完全正相关;如果它们方向完全相反(夹角为180度),点积是一个最大负数,表示两个向量完全负相关;如果它们垂直(夹角为90度或270度),则点积为零,表示这两个向量是不相关的。因此,向量的点积值的绝对值越大,则表示两个向量之间的相关性越强,如果向量的点积值绝对值越接近0,则说明两个向量相关性越弱。\n",

|

|||

|

|

"\n",

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "2c0af808-75f2-41d1-bb0b-804387fbdf0d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"向量的点积就是两个向量相乘的过程,设有两个三维向量$\\mathbf{A}$ 和 $\\mathbf{B}$,则向量他们之间的点积可以具体可以表示为:"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "b06c7ae5-7f41-41d1-b593-eb001c49dcf7",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"$$\n",

|

|||

|

|

"\\mathbf{A} \\cdot \\mathbf{B}^T = \\begin{pmatrix}\n",

|

|||

|

|

"a_1, a_2, a_3\n",

|

|||

|

|

"\\end{pmatrix} \\cdot\n",

|

|||

|

|

"\\begin{pmatrix}\n",

|

|||

|

|

"b_1 \\\\\n",

|

|||

|

|

"b_2 \\\\\n",

|

|||

|

|

"b_3\n",

|

|||

|

|

"\\end{pmatrix} = a_1 \\cdot b_1 + a_2 \\cdot b_2 + a_3 \\cdot b_3\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"相乘的结构为(1,3) y (3,1) = (1,1),最终得到一个标量。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "e3b78b7d-7018-4ead-8eea-3a5b003a59b2",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"在NLP的世界当中,我们所拿到的词向量数据或时间序列数据一定是具有多个样本的。我们需要求解**样本与样本两两之间的相关性**,综合该相关性分数,我们才能够计算出一个样本对于整个序列的重要性。在这里需要注意的是,在NLP的领域中,样本与样本之间的相关性计算、即向量的之间的相关性计算会受到向量顺序的影响。**这是说,以一个单词为核心来计算相关性,和以另一个单词为核心来计算相关性,会得出不同的相关程度,向量之间的相关性与顺序有关**。举例说明:\n",

|

|||

|

|

"\n",

|

|||

|

|

"假设我们有这样一个句子:**我爱小猫咪。**\n",

|

|||

|

|

"\n",

|

|||

|

|

"> - 如果以\"我\"字作为核心词,计算“我”与该句子中其他词语的相关性,那么\"爱\"和\"小猫咪\"在这个上下文中都非常重要。\"爱\"告诉我们\"我\"对\"小猫咪\"的感情是什么,而\"小猫咪\"是\"我\"的感情对象。这个时候,\"爱\"和\"小猫咪\"与\"我\"这个词的相关性就很大。\n",

|

|||

|

|

"\n",

|

|||

|

|

"> - 但是,如果我们以\"小猫咪\"作为核心词,计算“小猫咪”与该剧自中其他词语的相关性,那么\"我\"的重要性就没有那么大了。因为不论是谁爱小猫咪,都不会改变\"小猫咪\"本身。这个时候,\"小猫咪\"对\"我\"这个词的上下文重要性就相对较小。\n",

|

|||

|

|

"\n",

|

|||

|

|

"当我们考虑更长的上下文时,这个特点会变得更加显著:\n",

|

|||

|

|

"\n",

|

|||

|

|

"> - 我爱小猫咪,但妈妈并不喜欢小猫咪。\n",

|

|||

|

|

"\n",

|

|||

|

|

"此时对猫咪这个词来说,谁喜欢它就非常重要。\n",

|

|||

|

|

"\n",

|

|||

|

|

"> - 我爱小猫咪,小猫咪非常柔软。\n",

|

|||

|

|

"\n",

|

|||

|

|

"此时对猫咪这个词来说,到底是谁喜欢它就不是那么重要了,关键是它因为柔软的属性而受人喜爱。\n",

|

|||

|

|

"\n",

|

|||

|

|

"因此,假设数据中存在A和B两个样本,则我们必须计算AB、AA、BA、BB四组相关性才可以。在每次计算相关性时,作为核心词的那个词被认为是在“询问”(Question),而作为非核心的词的那个词被认为是在“应答”(Key),AB之间的相关性就是A询问、B应答的结果,AA之间的相关性就是A向自己询问、A自己应答的结果。\n",

|

|||

|

|

"\n",

|

|||

|

|

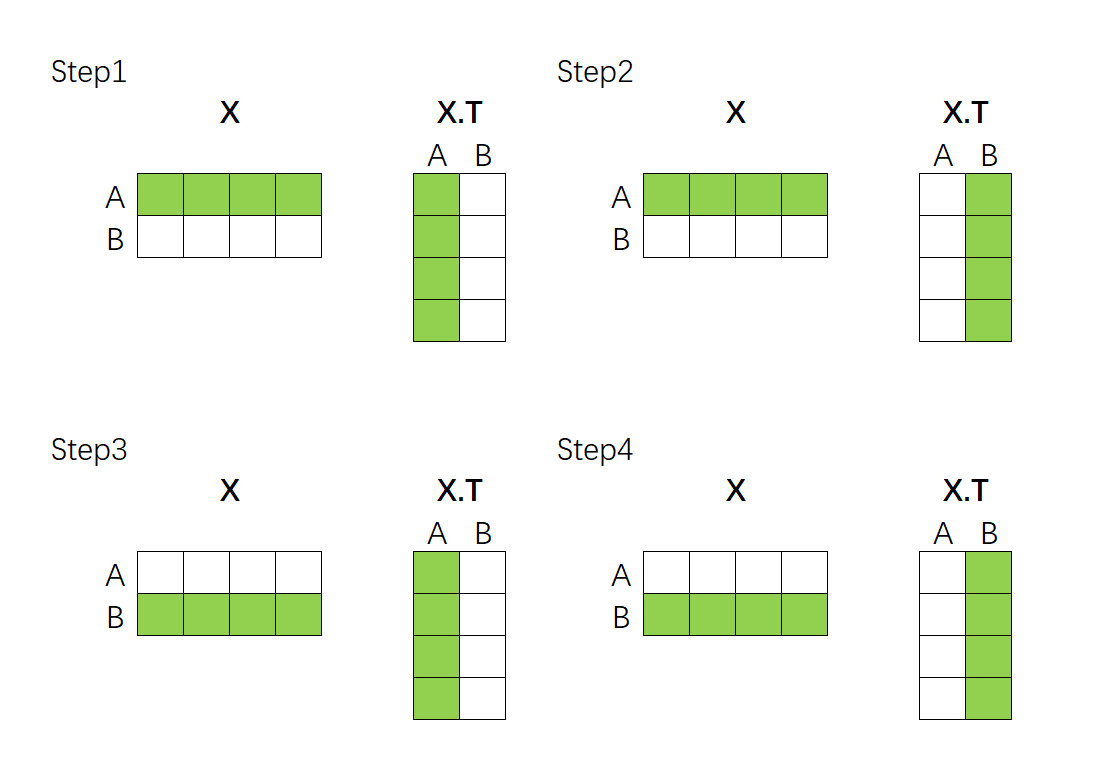

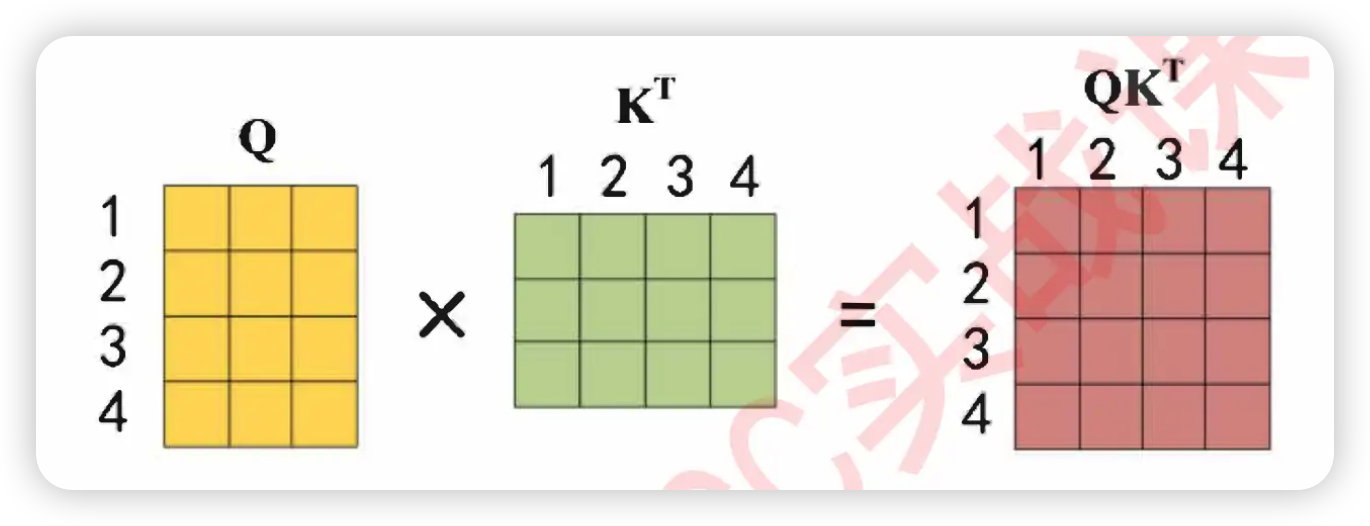

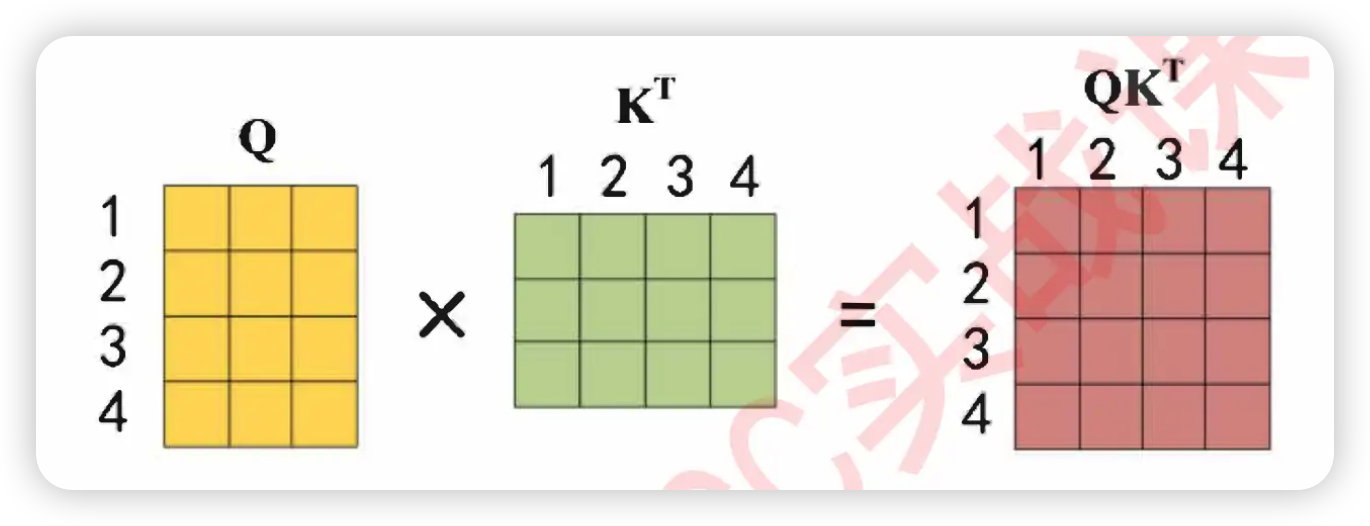

"这个过程可以通过矩阵的乘法来完成。假设现在我们的向量中有2个样本(A与B),每个样本被编码为了拥有4个特征的词向量。如下所示,如果我们要计算A、B两个向量之间的相关性,只需要让特征矩阵与其转置矩阵做点积就可以了——"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "2e41e0f7-d265-48b6-8ba6-ecbbbb51e72a",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "3aac4c51-1aeb-4dce-ab3b-423df48cb3ff",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"上述点积结果得到的最终矩阵是:\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$\\begin{bmatrix}\n",

|

|||

|

|

"r_{AA} & r_{AB} \\\\\n",

|

|||

|

|

"r_{BA} & r_{BB} \n",

|

|||

|

|

"\\end{bmatrix}$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "4ce9d240-c861-451c-906b-b6815d981755",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"该乘法规律可以推广到任意维度的数据上,如果是带有3个样本的序列与自身的转置相乘,就会得到3y3结构的相关性矩阵,如果是n个样本的序列与自身的转置相乘,就会得到nyn结构的相关性矩阵,这些相关性矩阵代表着**这一序列当中每个样本与其他样本之间的相关性**,相关系数的个数、以及相关性矩阵的结构只与样本的数量有关,与样本的特征维度无关。因此面对任意的数据,我们只需要让该数据与自身的转置矩阵相乘,就可以自然得到**这一序列当中每个样本与其他样本之间的相关性**构成的相关性矩阵了。\n",

|

|||

|

|

"\n",

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "92b27875-074d-4aa3-a01f-4c403e1098a7",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

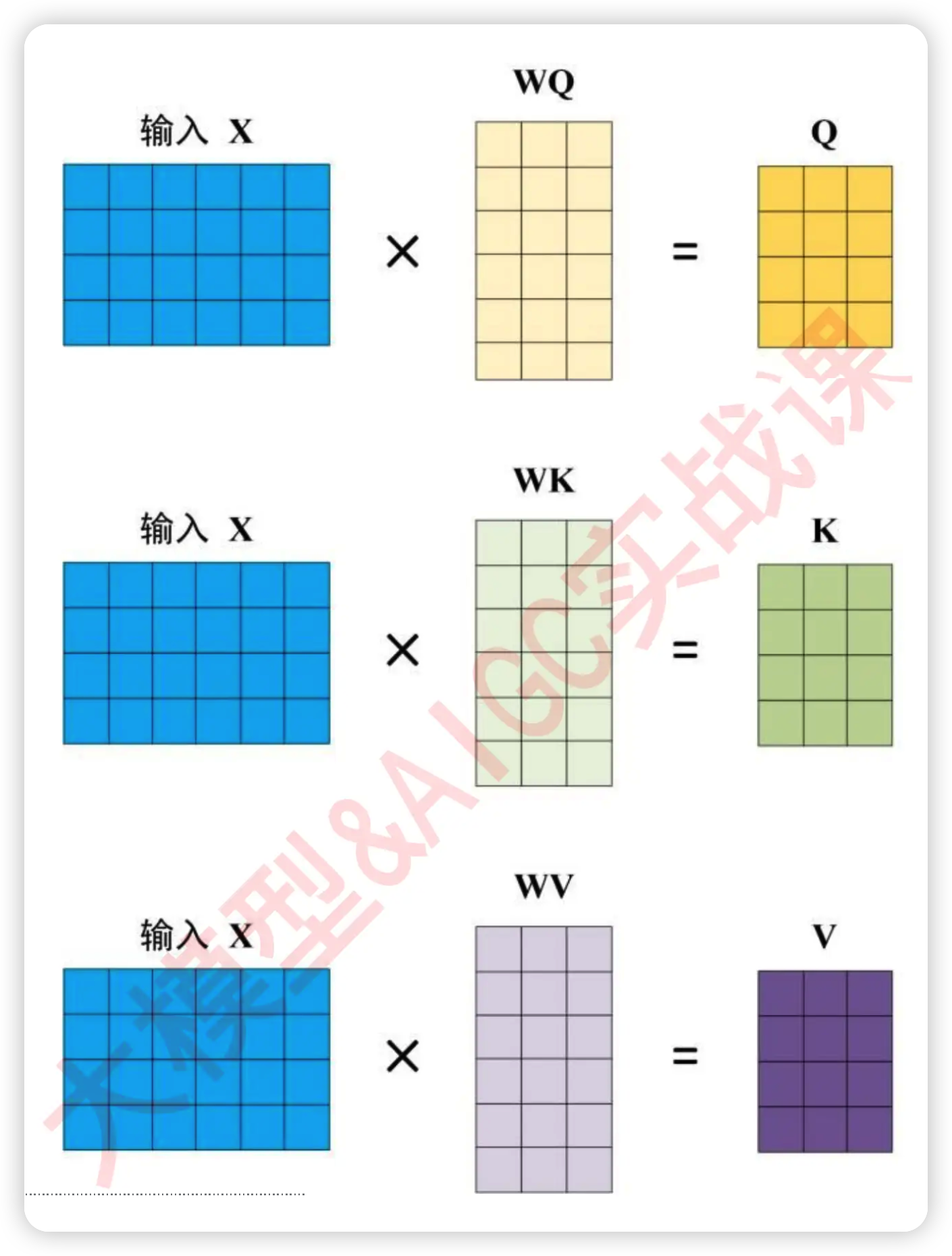

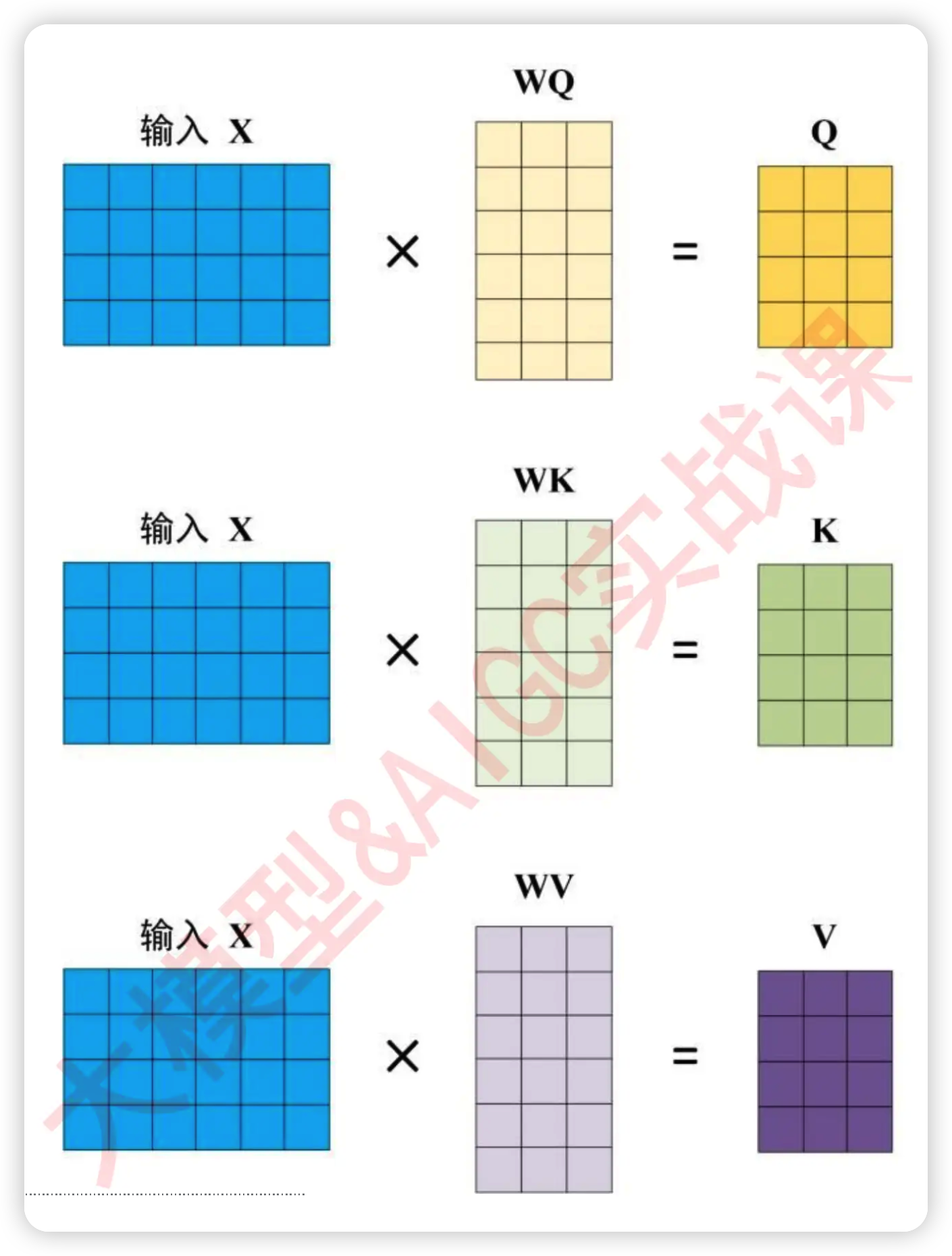

"当然,在实际计算相关性的时候,我们一般不会直接使用原始特征矩阵并让它与转置矩阵相乘,**因为我们渴望得到的是语义的相关性,而非单纯数字上的相关性**。因此在NLP中使用注意力机制的时候,**我们往往会先在原始特征矩阵的基础上乘以一个解读语义的$w$参数矩阵,以生成用于询问的矩阵Q、用于应答的矩阵K以及其他可能有用的矩阵**。\n",

|

|||

|

|

"\n",

|

|||

|

|

"在实际进行运算时,$w$是神经网络的参数,是由迭代获得的,因此$w$会依据损失函数的需求不断对原始特征矩阵进行语义解读,而我们实际的相关性计算是在矩阵Q和K之间运行的。使用Q和K求解出相关性分数的过程,就是自注意力机制的核心过程,如下图所示 ↓\n",

|

|||

|

|

"\n",

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "fecf3d55-e9bd-426d-a792-6ace51d439dc",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"到这里,我们已经将自注意力机制的内容梳理完毕了。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "43b9acd1-d216-4385-9930-47a76f3b6dd6",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"## 1.2 Transformer中的自注意力机制运算流程"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "5ebf4cf3-f7a9-49b2-bd43-fa4bc5ce0a4a",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"现在我们知道注意力机制是如何运行的了,在Transformer当中我们具体是如何使用自注意力机制为样本增加权重的呢?来看下面的流程。\n",

|

|||

|

|

"\n",

|

|||

|

|

"**Step1:通过词向量得到QK矩阵**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "4c9fab64-b32c-44d7-9a50-da0805c0575d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

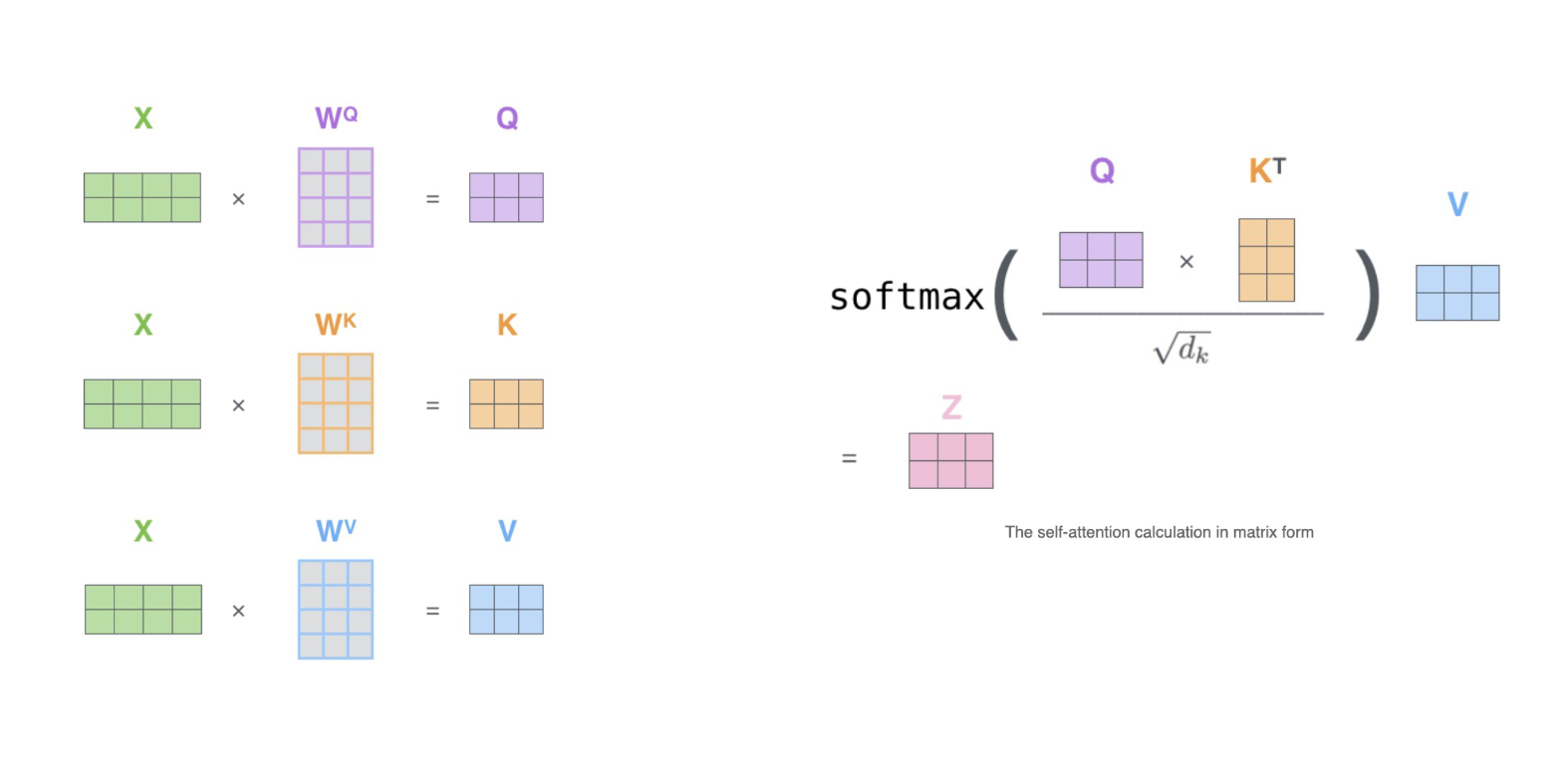

"首先,transformer当中计算的相关性被称之为是**注意力分数**,该注意力分数是在原始的注意力机制上修改后而获得的全新计算方式,其具体计算公式如下——"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "c89054cb-6fa7-43ff-ab74-1c262aec5e08",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"$$Attention(Q,K,V) = softmax(\\frac{QK^{T}}{\\sqrt{d_k}})V$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "79275bc4-fd44-49be-9eb8-9641fe633b11",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"在这个公式中,首先我们要先将原始特征矩阵转化为Q和K,然后令Q乘以K的转置,以获得最基础的相关性分数。同时,我们计算出权重之后,还需要将权重乘在样本上,以构成“上下文的复合表示”,因此我们还需要在原始特征矩阵基础上转化处矩阵V,用于表示原始特征所携带的信息值。假设现在我们有4个单词,每个单词被编码成了6列的词向量,那计算Q、K、V的过程如下所示:"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "4591b7a4-4e0e-4c42-87ee-1a97f6954473",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "4f2b57b9-ecde-4c20-b97b-7fab967186b1",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"其中的$W_Q$与$W_K$的结构都为(6,3),事实上我们值需要保证这两个参数矩阵能够与$y$相乘即可(即这两个参数矩阵的行数与y被编码的列数相同即可),在现代大部分的应用当中,一般$W_Q$与$W_K$都是正方形的结构。\n",

|

|||

|

|

"\n",

|

|||

|

|

"**Step2:计算$QK$相似度,得到相关性矩阵**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "cf0b8135-1426-4534-88ad-34182403f042",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"接下来我们让Q和K的转置相乘,计算出相关性矩阵。\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$Attention(Q,K,V) = softmax(\\frac{QK^{T}}{\\sqrt{d_k}})V$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"$QK^{T}$的过程中,点积是相乘后相加的计算流程,因此词向量的维度越高、点积中相加的项也就会越多,因此点积就会越大。此时,词向量的维度对于相关性分数是有影响的,在两个序列的实际相关程度一致的情况下,词向量的特征维度高更可能诞生巨大的相关性分数,因此对相关性分数需要进行标准化。在这里,Transformer为相关性矩阵设置了除以$\\sqrt{d_k}$的标准化流程,$d_k$就是特征的维度,以上面的假设为例,$d_k$=6。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "a32c4e92-2bc2-46a8-a47a-fe9345aeed96",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "e677a541-7b31-4355-89f2-46a46a7f392d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"**Step3:softmax函数归一化**\n",

|

|||

|

|

"\n",

|

|||

|

|

"将每个单词之间的相关性向量转换成[0,1]之间的概率分布。例如,对AB两个样本我们会求解出AA、AB、BB、BA四个相关性,经过softmax函数的转化,可以让AA+AB的总和为1,可以让BB+BA的总和为1。这个操作可以令一个样本的相关性总和为1,从而将相关性分数转化成性质上更接近“权重”的[0,1]之间的比例。这样做也可以控制相关性分数整体的大小,避免产生数字过大的问题。\n",

|

|||

|

|

"\n",

|

|||

|

|

"经过softmax归一化之后的分数,就是注意力机制求解出的**权重**。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "20287472-a299-44a2-92d0-3a1a5a50c40e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"**Step4:对样本进行加权求和,建立样本与样本之间的关系**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "e84a81cf-7d23-4481-9a7e-275d2327aed5",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "53e32837-f0d9-4094-b938-ebd57a3f9b68",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"现在我们已经获得了softmax之后的分数矩阵,同时我们还有代表原始特征矩阵值的V矩阵——"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "7966d731-6e83-447d-8e21-eb9cb8bdd817",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"$$\n",

|

|||

|

|

"\\mathbf{r} = \\begin{pmatrix}\n",

|

|||

|

|

"a_{11} & a_{12} \\\\\n",

|

|||

|

|

"a_{21} & a_{22}\n",

|

|||

|

|

"\\end{pmatrix},\n",

|

|||

|

|

"\\quad\n",

|

|||

|

|

"\\mathbf{V} = \\begin{pmatrix}\n",

|

|||

|

|

"v_{11} & v_{12} & v_{13} \\\\\n",

|

|||

|

|

"v_{21} & v_{22} & v_{23}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"二者相乘的结果如下:"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "fb4231d2-1af1-4e1c-80a8-4fcb65489715",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"$$\n",

|

|||

|

|

"\\mathbf{Z(Attention)} = \\begin{pmatrix}\n",

|

|||

|

|

"a_{11} & a_{12} \\\\\n",

|

|||

|

|

"a_{21} & a_{22}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"\\begin{pmatrix}\n",

|

|||

|

|

"v_{11} & v_{12} & v_{13} \\\\\n",

|

|||

|

|

"v_{21} & v_{22} & v_{23}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"= \\begin{pmatrix}\n",

|

|||

|

|

"(a_{11}v_{11} + a_{12}v_{21}) & (a_{11}v_{12} + a_{12}v_{22}) & (a_{11}v_{13} + a_{12}v_{23}) \\\\\n",

|

|||

|

|

"(a_{21}v_{11} + a_{22}v_{21}) & (a_{21}v_{12} + a_{22}v_{22}) & (a_{21}v_{13} + a_{22}v_{23})\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "13f70f41-6666-42df-8446-7fd3202bbb6a",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"观察最终得出的结果,式子$a_{11}v_{11} + a_{12}v_{21}$不正是$v_{11}$和$v_{21}$的加权求和结果吗?$v_{11}$和$v_{21}$正对应着原始特征矩阵当中的第一个样本的第一个特征、以及第二个样本的第一个特征,这两个v之间加权求和所建立的关联,正是两个样本之间、两个时间步之间所建立的关联。\n",

|

|||

|

|

"\n",

|

|||

|

|

"在这个计算过程中,需要注意的是,列脚标与权重无关。因为整个注意力得分矩阵与特征数量并无关联,因此在乘以矩阵$v$的过程中,矩阵$r$其实并不关心一行上有多少个$v$,它只关心这是哪一行的v。因此我们可以把Attention写成:\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\\mathbf{Z(Attention)} = \\begin{pmatrix}\n",

|

|||

|

|

"a_{11} & a_{12} \\\\\n",

|

|||

|

|

"a_{21} & a_{22}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"\\begin{pmatrix}\n",

|

|||

|

|

"v_{11} & v_{12} & v_{13} \\\\\n",

|

|||

|

|

"v_{21} & v_{22} & v_{23}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"= \\begin{pmatrix}\n",

|

|||

|

|

"(a_{11}v_{1} + a_{12}v_{2}) & (a_{11}v_{1} + a_{12}v_{2}) & (a_{11}v_{1} + a_{12}v_{2}) \\\\\n",

|

|||

|

|

"(a_{21}v_{1} + a_{22}v_{2}) & (a_{21}v_{1} + a_{22}v_{2}) & (a_{21}v_{1} + a_{22}v_{2})\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"很显然,对于矩阵$a$而言,原始数据有多少个特征并不重要,它始终都在建立样本1与样本2之间的联系。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "5ba8f279-efaf-479d-b0b1-c44108e35fe1",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

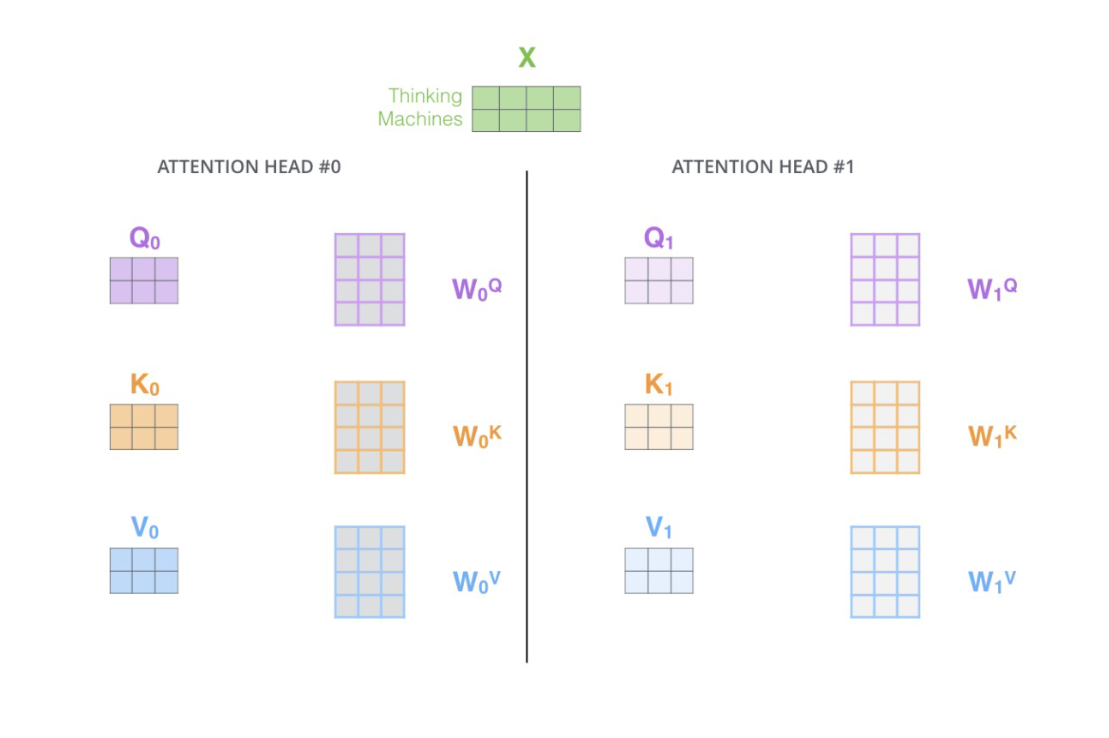

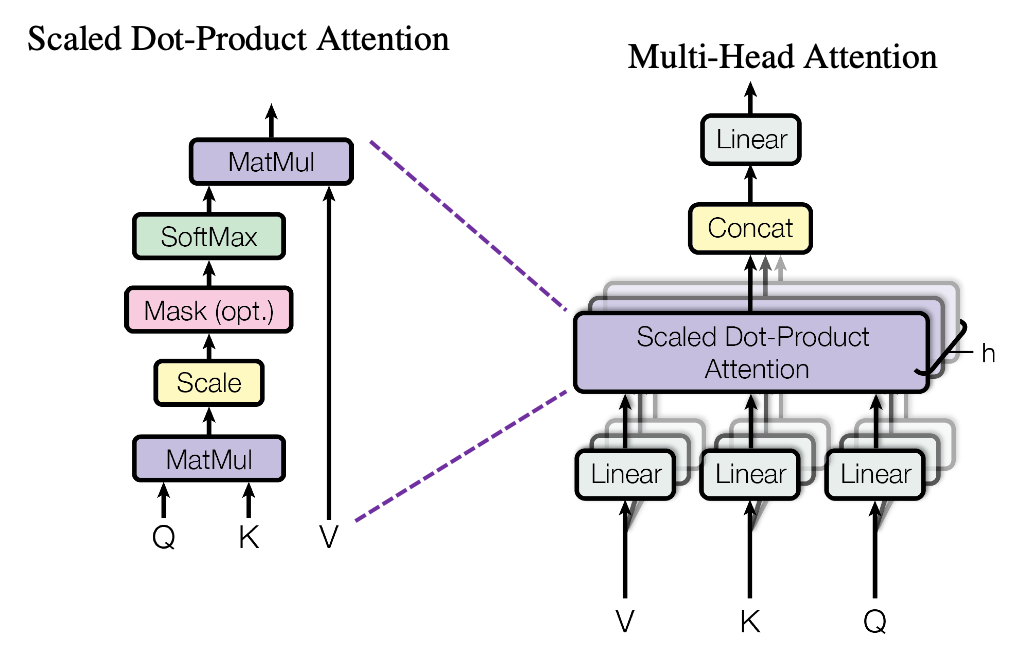

"## 1.3 Multi-Head Attention 多头注意力机制"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "0e9ab39d-e88e-4118-9110-16908d09a61b",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

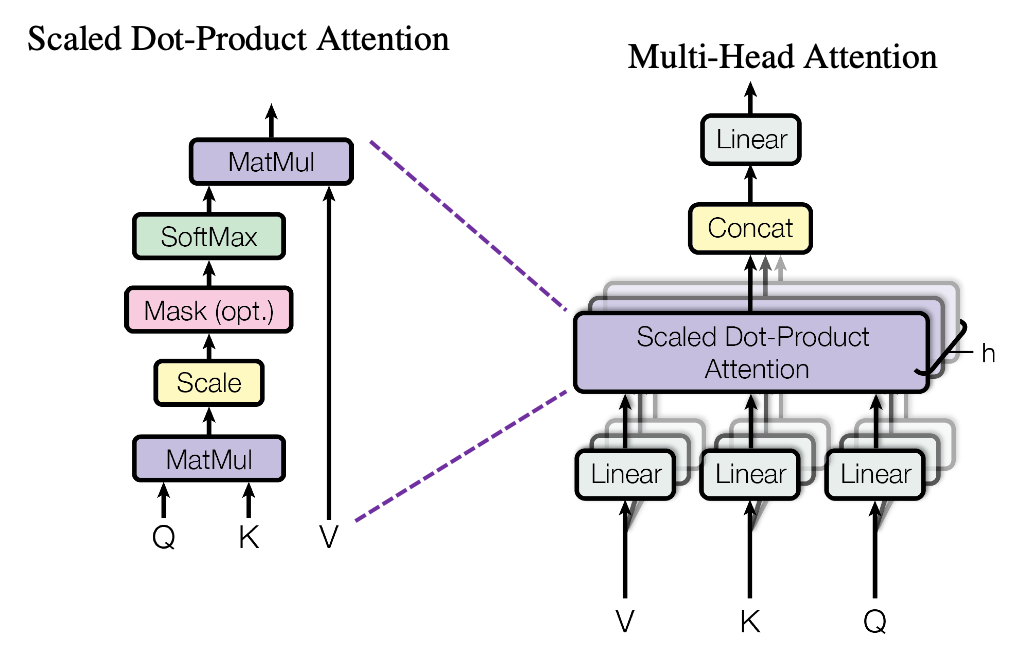

"Multi-Head Attention 就是在self-attention的基础上,对于输入的embedding矩阵,self-attention只使用了一组$W^Q,W^K,W^V$ 来进行变换得到Query,Keys,Values。而Multi-Head Attention使用多组$W^Q,W^K,W^V$ 得到多组Query,Keys,Values,然后每组分别计算得到一个Z矩阵,最后将得到的多个Z矩阵进行拼接。Transformer原论文里面是使用了8组不同的$W^Q,W^K,W^V$ 。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "68d8cc2f-16ef-4d50-9953-c11c1c3f1d32",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "d0e7f976-ad99-4e64-b4e9-2dbfdec9d23c",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"假设每个头的输出$Z_i$是一个维度为(2,3)的矩阵,如果我们有$h$个注意力头,那么最终的拼接操作会生成一个维度为(2, 3h)的矩阵。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "caa814f5-46d9-422b-879d-ad758f22519c",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"假设有两个注意力头的例子:\n",

|

|||

|

|

"\n",

|

|||

|

|

"1. 头1的输出 $ Z_1 $:\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"Z_1 = \\begin{pmatrix}\n",

|

|||

|

|

"z_{11} & z_{12} & z_{13} \\\\\n",

|

|||

|

|

"z_{14} & z_{15} & z_{16}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"2. 头2的输出 $ Z_2 $:\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"Z_2 = \\begin{pmatrix}\n",

|

|||

|

|

"z_{21} & z_{22} & z_{23} \\\\\n",

|

|||

|

|

"z_{24} & z_{25} & z_{26}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"3. 拼接操作:\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"Z_{\\text{concatenated}} = \\begin{pmatrix}\n",

|

|||

|

|

"z_{11} & z_{12} & z_{13} & z_{21} & z_{22} & z_{23} \\\\\n",

|

|||

|

|

"z_{14} & z_{15} & z_{16} & z_{24} & z_{25} & z_{26}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "2e3af21d-caef-45a4-9e34-85546f5c7972",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"一般情况:\n",

|

|||

|

|

"\n",

|

|||

|

|

"对于$h$个注意力头,每个头的输出$Z_i$为:\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"Z_i = \\begin{pmatrix}\n",

|

|||

|

|

"z_{i1} & z_{i2} & z_{i3} \\\\\n",

|

|||

|

|

"z_{i4} & z_{i5} & z_{i6}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "506ebeb2-f529-4093-b8fe-05a9fac4429d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"总拼接操作如下:\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"Z_{\\text{concatenated}} = \\begin{pmatrix}\n",

|

|||

|

|

"z_{11} & z_{12} & z_{13} & z_{21} & z_{22} & z_{23} & \\cdots & z_{h1} & z_{h2} & z_{h3} \\\\\n",

|

|||

|

|

"z_{14} & z_{15} & z_{16} & z_{24} & z_{25} & z_{26} & \\cdots & z_{h4} & z_{h5} & z_{h6}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"最终的结构为(2,3h)。因此假设特征矩阵中,序列的长度为100,序列中每个样本的embedding维度为3,并且设置了8头注意力机制,那最终输出的序列就是(100,24)。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "7c841ff2-008b-4bde-aca2-caf563699989",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "44c706ea-07c9-426f-ba34-57114fa8e823",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"以上就是Transformer当中的自注意力层,Transformer就是在这一根本结构的基础上建立了样本与样本之间的链接。在此结构基础上,Transformer丰富了众多的细节来构成一个完整的架构。让我们现在就来看看Transformer的整体结构。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "6a4306b7-3723-471f-9d16-f5e6a0a76f91",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"让我们一起来看看Transformer算法都由哪些元素组成,以下是来自论文《All you need is Attention》的架构图:"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "8db1c453-7eed-4cd4-b0a4-60e74b320627",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"<center><img src=\"https://machinelearningmastery.com/wp-content/uploads/2021/08/attention_research_1.png\" alt=\"描述文字\" width=\"400\">"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "f1ebbc78-282b-46d1-90b6-39b040fe81a3",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"Transformer的总体架构主要由两大部分构成:编码器(Encoder)和解码器(Decoder)。在Transformer中,编码是解读数据的结构,在NLP的流程中,编码器负责解构自然语言、将自然语言转化为计算机能够理解的信息,并让计算机能够学习数据、理解数据;而解码器是将被解读的信息“还原”回原始数据、或者转化为其他类型数据的结构,它可以让算法处理过的数据还原回“自然语言”,也可以将算法处理过的数据直接输出成某种结果。因此在transformer中,编码器负责接收输入数据、负责提取特征,而解码器负责输出最终的标签。当这个标签是自然语言的时候,解码器负责的是“将被处理后的信息还原回自然语言”,当这个标签是特定的类别或标签的时候,解码器负责的就是“整合信息输出统一结果”。\n",

|

|||

|

|

"\n",

|

|||

|

|

"在信息进入解码器和编码器之前,我们首先要对信息进行**Embedding和Positional Encoding两种编码**,这两种编码在实际代码中表现为两个单独的层,因此这两种编码结构也被认为是Transformer结构的一部分。经过编码后,数据会进入编码器Encoder和解码器decoder,其中编码器是架构图上左侧的部分,解码器是架构图上右侧的部分。\n",

|

|||

|

|

"\n",

|

|||

|

|

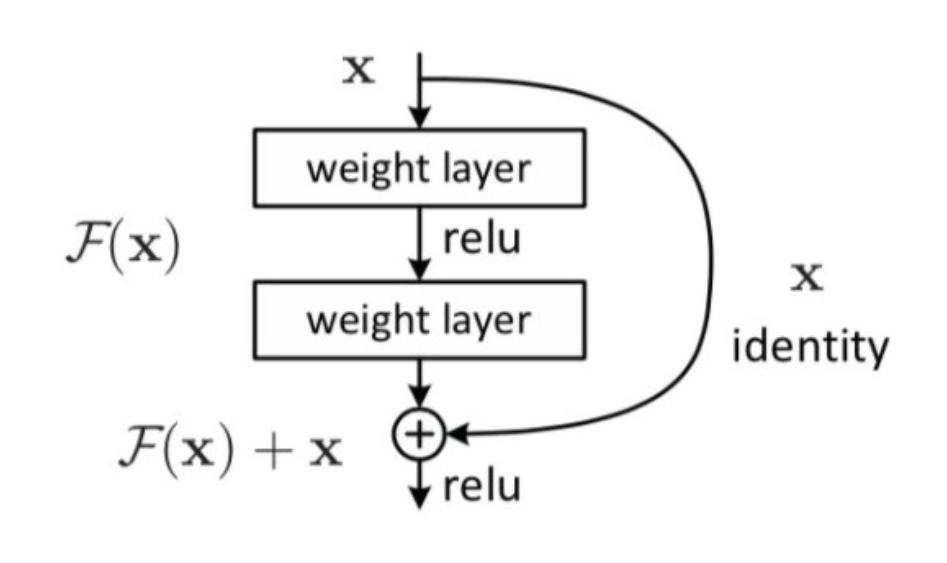

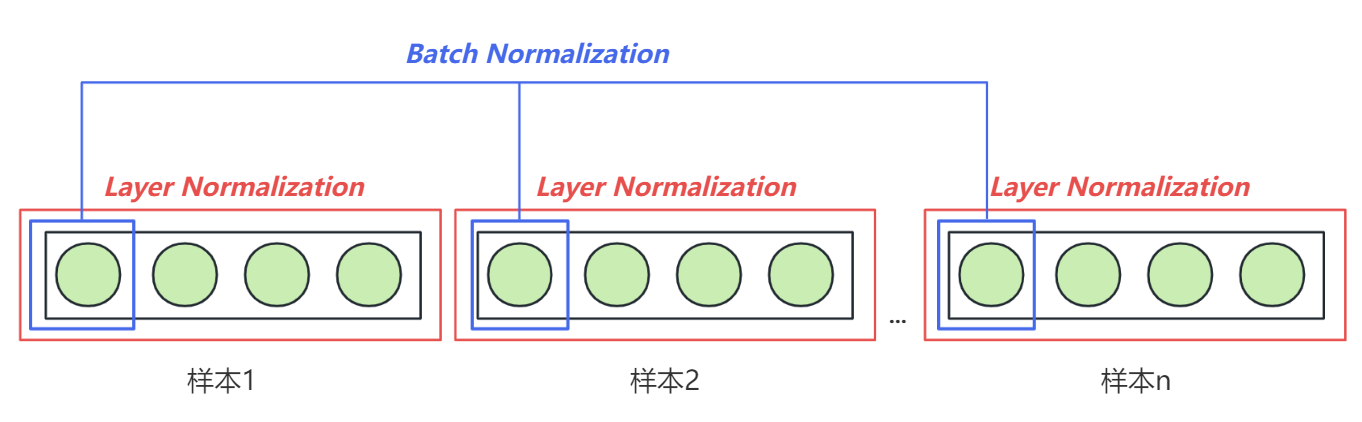

"**编码器(Encoder)结构包括两个子层:一个是多头的自注意力(Self-Attention)层,另一个是前馈(Feed-Forward)神经网络**。输入数据会先经过自注意力层,这层的作用是为输入数据中不同的信息赋予重要性的权重、让模型知道哪些信息是关键且重要的。接着,这些信息会经过前馈神经网络层,这是一个简单的全连接神经网络,用于将多头注意力机制中输出的信息进行整合。两个子层都被武装了一个残差连接(Residual Connection),这两个层输出的结果都会有残差链接上的结果相加,再经过一个层标准化(Layer Normalization),才算是得到真正的输出。在神经网络中,多头注意力机制+前馈网络的结构可以有很多层,在Transformer的经典结构中,encoder结构重复了6层。\n",

|

|||

|

|

"\n",

|

|||

|

|

"**解码器(Decoder)也是由多个子层构成的:第一个也是多头的自注意力层(此时由于解码器本身的性质问题,这里的多头注意力层携带掩码),第二个子层是普通的多头注意力机制层,第三个层是前馈神经网络**。自注意力层和前馈神经网络的结构与编码器中的相同。注意力层是用来关注编码器输出的。同样的,每个子层都有一个残差连接和层标准化。在经典的Transformer结构中,Decoder也有6层。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "9c6f2bbc-7263-4fcd-88ef-6997bdde5f56",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"**这个结构看似简单,但其实奥妙无穷,这里有许多的问题等待我们去挖掘和探索**。现在就让我们从解码器部分开始逐一解读transformer结构。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "bad3022c-e161-462f-bdfe-23b59d0fb43a",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"<center><img src=\"https://skojiangdoc.oss-cn-beijing.aliyuncs.com/2023DL/transformer/image-1.png\" alt=\"描述文字\" width=\"400\">"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "3358bb14-46ce-4556-b974-5e8fd9160c6a",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"## 2.1 Embedding层与位置编码技术"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "e4884c4a-5e1f-409e-8937-189aebbacb31",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"在Transformer中,embedding层位于encoder和decoder之前,主要负责进行语义编码。Embedding层将离散的词汇或符号转换为连续的高维向量,使得模型能够处理和学习这些向量的语义关系。通过嵌入表示,输入的序列可以更好地捕捉到词与词之间的相似性和关系。此外,在输入到编码器和解码器之前,通常还会添加位置编码(Positional Encoding),因为Transformer没有内置的序列顺序信息,也就是说Attention机制本身会带来**位置信息的丧失**。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- **首先,位置信息为什么重要?它可以从哪里来?**\n",

|

|||

|

|

"\n",

|

|||

|

|

"首先,位置信息就是顺序的信息,字符排列的顺序会影响语句的理解(还记得“屡战屡败”和“屡败屡战”的例子吗?同样的词在句子不同的地方出现,也可能会有不同的含义),我们说Transformer丧失了位置信息,意思是transformer并不理解样本与样本之间是按照什么顺序排列的(也就是不知道样本在序列中具体的位置)。\n",

|

|||

|

|

"\n",

|

|||

|

|

"还记得RNN和LSTM是如何处理数据的吗?RNN和LSTM以序列的方式处理输入数据,即一个时间步一个时间步地处理输入序列的每个元素。每个时间步的隐藏状态依赖于前一个时间步的隐藏状态。这种机制天然地捕捉了序列的顺序信息。由于RNN和LSTM在处理序列时会保留前一时间步的信息并传递到下一时间步,所以它们能够内在地理解和处理序列的时间依赖关系和顺序信息。然而,与RNN和LSTM不同,Transformer并不以序列的方式逐步处理输入数据,而是一次性处理整个序列。Attention能够通过点积的方式一次性计算出所有向量之间的相关性、并且多头注意力机制中不同的头还可以并行,因此Attention与Transformer缺乏天然的顺序信息。\n",

|

|||

|

|

"\n",

|

|||

|

|

"- **相关性计算过程中有标识,这些标识不能够成为位置信息吗?什么信息才算是位置信息/顺序信息呢?**\n",

|

|||

|

|

"\n",

|

|||

|

|

"在注意力机制中,权重矩阵$softmax(\\frac{QK^{T}}{\\sqrt{d_k}})$中的每个元素$a_{ij}$表示序列中位置$i$和位置$j$之间的相关性,但是却并没有假设这两个相关的元素之间的位置信息。具体来说,虽然我们使用了1、2这样的脚标,但Attention实际在进行计算的时候,只会认知两个具体的相关性数字,并没有显性地认知到脚标——\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\\mathbf{Z(Attention)} = \\begin{pmatrix}\n",

|

|||

|

|

"a_{11} & a_{12} \\\\\n",

|

|||

|

|

"a_{21} & a_{22}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"\\begin{pmatrix}\n",

|

|||

|

|

"v_{11} & v_{12} & v_{13} \\\\\n",

|

|||

|

|

"v_{21} & v_{22} & v_{23}\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"= \\begin{pmatrix}\n",

|

|||

|

|

"(a_{11}v_{11} + a_{12}v_{21}) & (a_{11}v_{12} + a_{12}v_{22}) & (a_{11}v_{13} + a_{12}v_{23}) \\\\\n",

|

|||

|

|

"(a_{21}v_{11} + a_{22}v_{21}) & (a_{21}v_{12} + a_{22}v_{22}) & (a_{21}v_{13} + a_{22}v_{23})\n",

|

|||

|

|

"\\end{pmatrix}\n",

|

|||

|

|

"$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"由于Transformer模型放弃了“逐行对数据进行处理”的方式,而是一次性处理一整张表单,因此它不能直接像循环神经网络RNN那样在训练过程中就捕捉到单词与单词之间的位置信息。在经典的深度学习场景当中,最典型的顺序信息就是**数字的大小**。由于数字天生是带有大小顺序的,因此数字本身可以被认为是含有顺序一个信息,只要让有顺序的信息和有顺序的数字相匹配,就可以让算法天然地认知到相应的顺序。因此我们自然而然地**想要对样本的位置本身进行“编码”**,利用数字本身自带的顺序来告知Transformer。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "36bcbdda-2068-4b41-a958-65bd1fb4254e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- **位置信息如何被告知给Attention/Transformer这样的算法?**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "ad08a935-f6bc-4698-b34f-de5c385545d9",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

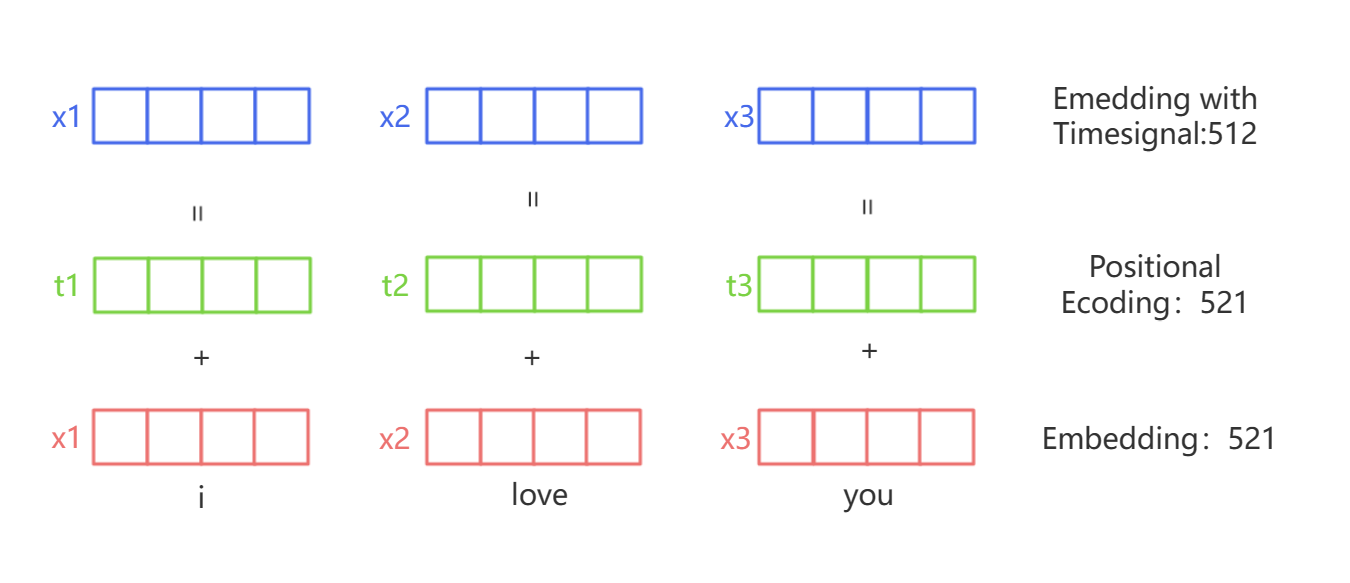

"为了解决位置信息的问题,Transformer引入了位置编码(positional encoding)技术来补充语义词嵌入。我们首先将样本的位置转变成相应的数字或向量,然后让位置编码的这个向量被加到原有的词嵌入向量embedding向量上,这样模型就可以同时知道一个词的语义和它在句子中的位置。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "12e9d14a-4d3b-4c9f-9e95-c91d11d0aa6e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "7fa0573c-3537-4a8d-a481-799ba78990a4",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"(上图中的数字编号有误,无论是embedding、postion encoding还是最终加和的结果,都应该等于512,521是错误的表示)"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "a1aca67e-4fcd-4173-8791-209376d5426f",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"位置编码使用了一种特殊的函数,这个函数会为序列中的每个位置生成一个向量。对于一个特定的位置,这个函数生成的向量在所有维度上的值都是不同的。这保证了每个位置的编码都是唯一的,而且不同位置的编码能够保持一定的相对关系。**在transformer的位置编码中,我们需要对每个词的每个特征值给与位置编码,所有这些特征位置的编码共同组合成了一个样本的位置编码**。例如,当一个样本拥有4个特征时,我们的位置编码也会是包含4个数字的一个向量,而不是一个单独的编码。因此,**位置编码矩阵是一个与embedding后的矩阵结构相同的矩阵**。\n",

|

|||

|

|

"\n",

|

|||

|

|

"在Transformer模型中,词嵌入和位置编码被相加,然后输入到模型的第一层。这样,Transformer就可以同时处理词语的语义和它在句子中的位置信息。这也是Transformer模型在处理序列数据,特别是自然语言处理任务中表现出色的一个重要原因。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "c5feef31-b541-4e79-868f-429d7201ad82",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- **Transformer中的正余弦位置编码**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "2f24d71b-c0d6-4a51-ab75-242b3e5eada3",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"在过去最为经典的位置编码就是OrdinalEncoder顺序编码,但在Transformer中我们需要的是一个编码向量,而非单一的编码数字,因此OrdinalEncoder编码就不能使用了。在众多的、构成编码向量的方式中,Transformer选择了“**正余弦编码**”这一特别的方式。让我们一起来看看正余弦编码的含义——"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "23c62d58-c6e7-478e-bc02-d838e2c9453e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

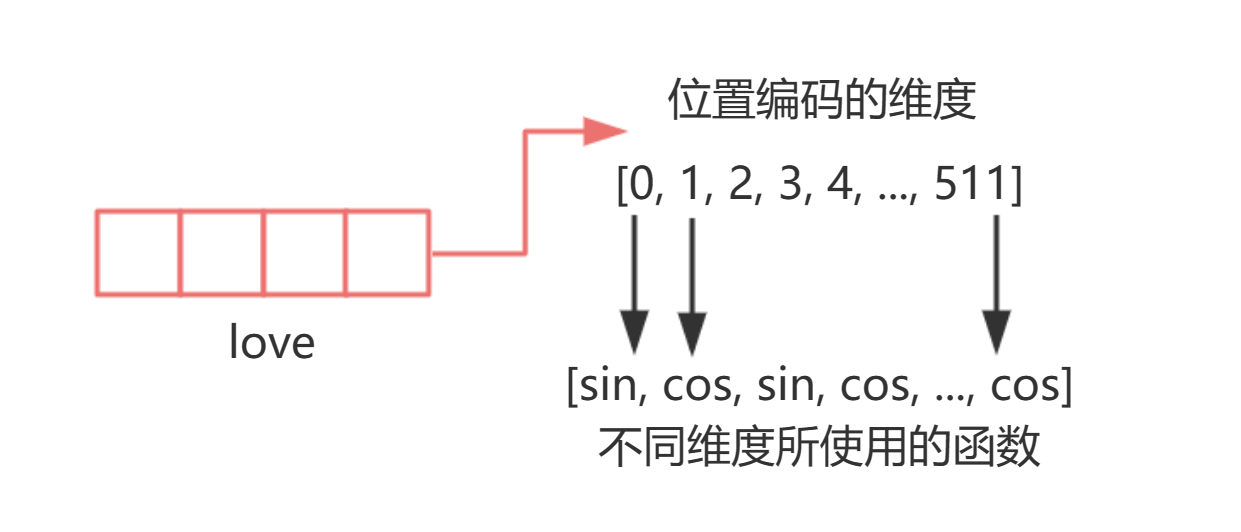

"首先,正余弦编码是使用正弦函数和余弦函数来生成具体编码值的编码方式。对于任意的词向量(也就是数据中的一个样本),正余弦编码在偶数维度上采用了sin函数来编码,奇数维度采用了cos函数来编码,sin函数与cos函数交替使用,最终构成一个多维度的向量——\n",

|

|||

|

|

"\n",

|

|||

|

|

"\n",

|

|||

|

|

""

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "208c74eb-6174-401c-8928-a8a06a8ea3de",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"通过对不同的维度进行不同的三角函数编码,来构成一串独一无二的编码组合。这种编码组合与embedding类似,都是将信息投射到一个高维空间当中,只不过正余弦编码是将样本的位置信息(也就是样本的索引)投射到高维空间中,且每一个特征的维度代表了这个高维空间中的一维度。对正余弦编码来说,编码数字本身是依赖于**样本的位置信息(索引)、所有维度的编号、以及总维度数三个因子**计算出来的。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "26b91d7d-3416-4606-a5ed-ea65109a2a5e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"具体来看,正余弦编码的公式如下:\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 正弦位置编码(Sinusoidal Positional Encoding)\n",

|

|||

|

|

"$$PE_{(pos, 2i)} = \\sin \\left( \\frac{pos}{10000^{\\frac{2i}{d_{\\text{model}}}}} \\right) $$\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 余弦位置编码(Cosine Positional Encoding)\n",

|

|||

|

|

"$$ PE_{(pos, 2i+1)} = \\cos \\left( \\frac{pos}{10000^{\\frac{2i}{d_{\\text{model}}}}} \\right) $$\n",

|

|||

|

|

"\n",

|

|||

|

|

"将这段LaTey代码粘贴到支持LaTey的环境中(如LaTey编辑器或支持LaTey的Markdown渲染器)即可得到公式的正确显示。\n",

|

|||

|

|

"\n",

|

|||

|

|

"其中——\n",

|

|||

|

|

"> - pos代表样本在序列中的位置,也就是样本的索引(是三维度中的seq_len/vocal_size/time_step这个维度上的索引)<br><br>\n",

|

|||

|

|

"> - $2i$和$2i+1$分别代表embedding矩阵中的偶数和奇数维度索引,当我们让i从0开始循环增长时,可以获得[0,1,2,3,4,5,6...]这样的序列。<br><br>\n",

|

|||

|

|

"> - $d_{\\text{model}} $ 代表embedding后矩阵的总维度。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "d327b655-f508-41f7-a860-61d3c787cf00",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"在这里,你可以选择停下脚步、开启下一节课程,你也可以选择继续听更深入的关于位置编码的内容。这里有选择的原因是,位置编码作为深度学习和时间序列处理过程中非常重要的一种技术,在不同的场景下被频繁地使用,我们可以将其用于纹理建模、声音处理、信号处理、震动分析等多种场合,但同时,我们也将它作为一种行业惯例在进行使用,因此你或许无需对正余弦位置编码进行特别深入的探索。\n",

|

|||

|

|

"\n",

|

|||

|

|

"但正余弦位置编码本身是一种非常奇妙的结构,在接下来的内容中,我将带你仔细剖析正余弦位置编码的诸多细节和意义。"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "5ab6b2c2-dcb6-4006-9b2a-0f13ab1a087e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- **为什么要使用正余弦编码?它有什么意义?**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "b40f6119-02b4-4ff5-bae1-fe08e9be6c2f",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 正弦位置编码(Sinusoidal Positional Encoding)\n",

|

|||

|

|

"$$PE_{(pos, 2i)} = \\sin \\left( \\frac{pos}{10000^{\\frac{2i}{d_{\\text{model}}}}} \\right) $$\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 余弦位置编码(Cosine Positional Encoding)\n",

|

|||

|

|

"$$ PE_{(pos, 2i+1)} = \\cos \\left( \\frac{pos}{10000^{\\frac{2i}{d_{\\text{model}}}}} \\right) $$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "f0d19138-d827-425a-953d-de8170bdc39e",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"首先我们先来看Pos,Pos是样本的索引,它有多大会取决于实际的数据尺寸。如果一个时间序列或文本数据是很长的序列,那Pos数值本身也会变得很大。假设我们使用很大的数值与原本的embedding序列相加,那位置编码带来的影响可能会远远超过原始的语义、会导致喧宾夺主的问题,因此我们天然就有限制位置编码的大小的需求。在这个角度来看,使用sin和cos这样值域很窄的函数、就能够很好地限制位置编码地大小。\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>正余弦编码的意义①:sin和cos函数值域有限,可以很好地限制位置编码的数字大小。**\n",

|

|||

|

|

"\n",

|

|||

|

|

"假设我们使用的是单变量序列,那我们或许只需要sin(pos)或者cos(pos)看起来就足够了,但为了给每个不同的维度都进行编码,我们肯定还要做点儿别的文章。首先,位置信息和语义信息一样,当我们将其投射到高维空间时,我们也在尝试用不同的维度来解读位置信息。但我们使用正弦余弦这样的三角函数时,如何能够将信息投射到不同的维度呢——答案是创造各不相同的sin和cos函数。虽然都是正弦/余弦函数,但我们可以为函数设置不同的频率来获得各种高矮胖瘦的函数——"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 58,

|

|||

|

|

"id": "e0c806bb-5df1-4b47-bb2c-2f4bcbc09e4f",

|

|||

|

|

"metadata": {},

|

|||

|

|

"outputs": [

|

|||

|

|

{

|

|||

|

|

"data": {

|

|||

|

|

"image/png": "iVBORw0KGgoAAAANSUhEUgAAA1gAAAKACAYAAACBhdleAAAAOXRFWHRTb2Z0d2FyZQBNYXRwbG90bGliIHZlcnNpb24zLjguMiwgaHR0cHM6Ly9tYXRwbG90bGliLm9yZy8g+/7EAAAACXBIWXMAAAsTAAALEwEAmpwYAAEAAElEQVR4nOzdd1xUV/r48c+hg6AiIKCoFCmKCPbeTTU9MWuSTWLMbjZtk2zKJrvZb5LNZjfZX7Kbnmx63/TeEzVq1IgVGwKCgiIdVHqd8/tjwEUFacPcO8Pzfr18RZiZe5+Z4MN97jnnOUprjRBCCCGEEEKInnMxOgAhhBBCCCGEcBZSYAkhhBBCCCGEjUiBJYQQQgghhBA2IgWWEEIIIYQQQtiIFFhCCCGEEEIIYSNSYAkhhBBCCCGEjUiBJUxNKTVLKZVudBxCiL5Bco4Qwl4k3zgvKbDEKSmlspVSNUqpylZ/hvTi+bRSamTL11rrn7XWsb10rheVUulKKYtSamlvnEMI0TXOmnOUUjFKqc+VUsVKqTKl1PdKqV7JbUKIznHifBOolFqnlCpVSh1RSv2ilJph6/OI9kmBJTrjXK21b6s/eUYHZCPbgRuBrUYHIoQ4jjPmnIHAF0AsEAxsBD43MiAhBOCc+aYSWAYEAf7AP4EvlVJuhkbVh0iBJbql+a7PwlZfP6CUerv57+HNd2muVkodUEqVKKXubfVcV6XUn5VSWUqpCqXUFqXUMKXUmuanbG++i/QrpdRcpVRuq9eOUkqtar4js1spdV6rx15XSj2rlPq6+bjJSqmo9t6D1vpZrfUKoNaWn40QwvYcPedorTdqrV/RWpdprRuAx4FYpVSAjT8qIUQPOUG+qdVap2utLYACmrAWWoNs+kGJdkmBJXrTTKx3axcA9ymlRjV//3bgMuBsoD/WuyzVWuvZzY8nNt9Fer/1wZRS7sCXwA/AYOD3wDsnTLNZAvwVayLJBP7eG29MCGFKjpRzZgMFWuvSrr1FIYRJmD7fKKV2YL2J/AXwsta6qJvvVXSRFFiiMz5rvptyRCn1WRde91etdY3WejvW6XiJzd//DfCX5rsrWmu9vZMXGVMBX+ARrXW91nol8BXWRNbi0+Y7xY3AO0BSF+IVQpiDU+ccpVQY8CzWCzEhhLGcNt9orcdiLfIuB9Z24b2JHpK5mKIzLtBaL+/G6wpa/b0aa+IAGAZkdeN4Q4CDzUPeLXKAoZ04pxDCcThtzlFKBWG9Q/2c1vrdbsQkhLAtp803YJ0uCLyrlNqjlEppLghFL5MRLNFdVYBPq69DuvDag0C7a6NOIQ8YppRq/XM7HDjUjWMJIRyLw+ccpZQ/1uLqC621TF8WwrwcPt+0wR2ItNGxRAekwBLdlQIsUUq5K6UmApd04bUvA39TSkUrq7GtFnoX0n4CSMZ6x+aPzeedC5wLvNedN6CU8lBKeWFdAOqulPI6IbEJIcwjBQfOOUqp/sD3wDqt9T1dfb0Qwq5ScOx8M1UpNbP5OsdbKXU31u6lyV09lugeuZgU3fV/WO/QHMa64PK/XXjtv4EPsN7JLQdeAbybH3sAeKN5LvSlrV+kta7HmmzOAkqA54CrtNZp3XwPPwA1wHTgxea/zz7lK4QQRnH0nHMhMAm4Rh2/587wbhxLCNG7HD3feGJd51mKdQTsbGCRk7SgdwhKa210DEIIIYQQQgjhFGQESwghhBBCCCFsRAosIYQQQgghhLARKbCEEEIIIYQQwkakwBJCCCGEEEIIG3G6jYYDAwN1eHi40WEIIZpt2bKlRGsdZHQcvUVyjhDmIflGCGFP7eUcpyuwwsPD2bx5s9FhCCGaKaVyjI6hN0nOEcI8JN8IIeypvZwjUwSFEEIIIYQQwkakwBJCCCGEEEIIGzG0wFJKvaqUKlJK7WrncaWUekoplamU2qGUGm/vGIUQzkHyjRDCniTnCNF3Gb0G63XgGeDNdh4/C4hu/jMFeL75v0J0S0NDA7m5udTW1hoditPx8vIiLCwMd3d3o0Npz+tIvhFC2M/rSM4Rok8ytMDSWq9RSoWf4innA29qrTWwQSk1UCkVqrXOt0+EzqfqaBWFOSUcKTpKfW0DTY1N+PT3xs/fl+DwIPz8fY0OsVfl5ubi5+dHeHg4Simjw3EaWmtKS0vJzc0lIiLC6HDa1FfzjcVi4cC+Yoryj+Dq6kL4yGACBvc3OixhgPIj1exLz6e2poGgkAGMiBqMm7ur0WE5LWfKOVprMnOKKS6tIHJ4ECFBkkNsRWtNxv4iyg5XMTI8iKAAP6NDchpNTRb27i/icHk1sZHBDBrYz27nNnoEqyNDgYOtvs5t/t5xyUcpdR1wHcDw4cPtFpzZaa3J3n2Q5K+2kLohg7TkvRwuPHrK1wwcPIDIxBEkzoknaf4Y4iaPxMXFeZbq1dbWSnHVC5RSBAQEUFxcbHQoPdGpfAOOkXNqquv45K31fP3BRsqKK457LG7sMC5ZOpMZC0bLv4U+YOuGTN5/aTU7NmdjvZa3GuDvw2nnj+dX187Gb4CPgRH2WQ5xjXPgUBkPPf0tqXvzm+OBM+fE84ffLMDH28Pu8TiTfQdKeOipb8jYXwSAi4vinAUJ3HLNPLw8TTsbxCFk7Cvkoae/Zd+BEsD62V505jhuvGo2Hu69X/6YvcDqFK31i8CLABMnTtQdPN3pFR0o5puXVvDTe2vJyyoEYFjsECacnkh4/HCCRwQyKNQfDy93XN1cqa6ooaKskvx9RRzYk0v6pkxe+8u7AAQNC2DupdM5Y9l8RowKM/Jt2YxcUPaOvvS5mj3n7Nqazf/780cU5R1h0swYlt5yGsPCg6ivbyBj5yG++3QLD93+LpNmxnDXPy6h/0C5uHZGNdV1PPPQl6z4KoXA4P5ccf084seNwNvHg/zcMtav3MMnb67jh8+2csdDFzNldqzRIYs2GJlv9h8s4eb/ex+AO69bSOSIINZuyuS9LzaTc6iMJx9YjLeXFFndkbGvkJvvex8vTzfuvuF0RoQF8NP6dD76ZisHDpXx2F8uliKrm3akHeKOv32EXz8v/nzTmQwNHciPP+859tn+vz9fiJtb747em73AOgQMa/V1WPP3RBvSN2Xyzt8/JvmrLWgNE04fy6V3nc/UcycSEOrfpWMdLSln03cprHp/HZ88+Q0f/utLJp01jktuP5dx88f0qYtp0Wc4Rb5Z+XUKj9/3KUGhA3ns9d8wZnz4cY8nTorkoqum8+X7G3nl399x85LneOSlaxgyLMCYgEWvKCup4P6b3yIrLZ8rfjePX/12Dh4e//uVHzd2GPPOTmR/RgGP/eVj7r/5La676ywuunKGgVH3OabOOVXVdfzxH5/g5urCsw8tIaz5OmJs3FDiY4bwf499wd+f+Y6/3XGuXBN00ZHyau76xyf49vPk+b9fRnCgdcrl2LihjI4O5cEnv+aJV1Zyz41nGByp4ykpq+Te//c5Af6+PP3XS49NuUwcFUZsZDD/fP4HnnljNbddO79X4zD73K8vgKuaO+1MBY6acW6y0fbvzOH/zn+Em6f8iV1r0/jV3Rfw1r5nefjbv7DoutO6XFwBDAjsz8Jfz+ahL//Ee4deYOmDS8jcuo+7T3uQPy78K+mbs3rhnfQNrq6uJCUlHfuTnZ1ts2N/9tlnpKamHvv6vvvuY/ny5T0+bmlpKfPmzcPX15ebb765x8czKYfPNz99s51H//wxo5KG8+Q7vzupuGrh6ubKBVdM47HXf0tNVR1/XPYKeQdL7Rus6DUVR6u55zevcnB/Cfc/9WuuvGnBccVVaxExITz+1nXMXBjPi49+y4ev/WznaPs0U+ecJ1/7icKSCv5+9/nHiqsWc6ZE89vLZrLqlwyWr00zKELH9e+XVnC0ooZ//unCY8VVi9NmjeLXF07hqxU7WZO816AIHdejL/xIbV0DD999/knr2c5dOJbFi8bz0Tdb2byjd/ckN7pN+7vAL0CsUipXKXWtUup6pdT1zU/5BtgHZAIvATcaFKopVVfU8J/bX+f68X9k189pLP3bEt7a9yzL/n45wSOCbHaegUEDuOIvF/N29vPc9OQ

|

|||

|

|

"text/plain": [

|

|||

|

|

"<Figure size 864x648 with 9 Axes>"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

"metadata": {

|

|||

|

|

"needs_background": "light"

|

|||

|

|

},

|

|||

|

|

"output_type": "display_data"

|

|||

|

|

}

|

|||

|

|

],

|

|||

|

|

"source": [

|

|||

|

|

"import numpy as np\n",

|

|||

|

|

"import matplotlib.pyplot as plt\n",

|

|||

|

|

"\n",

|

|||

|

|

"# 定义绘制正弦函数的函数\n",

|

|||

|

|

"def plot_sin_functions(num_functions):\n",

|

|||

|

|

" y = np.linspace(0, 10, 1000) # 定义 y 轴范围\n",

|

|||

|

|

" colors = plt.cm.viridis(np.linspace(0, 1, num_functions)) # 生成颜色序列\n",

|

|||

|

|

"\n",

|

|||

|

|

" fig, ays = plt.subplots(3, 3, figsize=(12, 9)) # 创建3y3子图\n",

|

|||

|

|

"\n",

|

|||

|

|

" # 绘制每个正弦函数\n",

|

|||

|

|

" for i, ay in enumerate(ays.flat):\n",

|

|||

|

|

" if i < num_functions:\n",

|

|||

|

|

" frequency = (i + 1) * 0.5 # 通过增加倍数来调整频率\n",

|

|||

|

|

" y = np.sin(frequency * y)\n",

|

|||

|

|

" ay.plot(y, y, label=f'Function {i+1}', color=colors[i])\n",

|

|||

|

|

" ay.set_title(f'Function {i+1}')\n",

|

|||

|

|

" ay.set_ylabel('y')\n",

|

|||

|

|

" ay.set_ylabel('sin(y)')\n",

|

|||

|

|

" ay.legend()\n",

|

|||

|

|

"\n",

|

|||

|

|

" plt.tight_layout()\n",

|

|||

|

|

" plt.show()\n",

|

|||

|

|

"\n",

|

|||

|

|

"# 绘制9个正弦函数\n",

|

|||

|

|

"plot_sin_functions(9)\n"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "5e810dc0-2972-4ddb-b0b1-631a46ec879d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"如果能够将不同的特征投射到**不同频率的sin和cos函数上**,就可以让每个特征都投射到一个独特的维度上,各类不同的信息维度共同构成一个解构位置信息的空间,就能够形成对位置信息的深度解读。\n",

|

|||

|

|

"\n",

|

|||

|

|

"**<center>正余弦编码的意义②:通过调节频率,我们可以得到多种多样的sin和cos函数,<br><br>从而可以将位置信息投射到每个维度都各具特色、各不相同的高维空间,以形成对位置信息的更好的表示**"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "eb4fcbb1-ee09-4810-8ac6-a5c81f79c0e2",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"- 正弦位置编码(Sinusoidal Positional Encoding)\n",

|

|||

|

|

"$$PE_{(pos, 2i)} = \\sin \\left( \\frac{pos}{10000^{\\frac{2i}{d_{\\text{model}}}}} \\right) $$\n",

|

|||

|

|

"\n",

|

|||

|

|

"- 余弦位置编码(Cosine Positional Encoding)\n",

|

|||

|

|

"$$ PE_{(pos, 2i+1)} = \\cos \\left( \\frac{pos}{10000^{\\frac{2i}{d_{\\text{model}}}}} \\right) $$"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"id": "180b8539-cea9-4a11-adb2-909321b4358d",

|

|||

|

|

"metadata": {},

|

|||

|

|

"source": [

|

|||

|

|

"接下来的问题就是如何赋予sin和cos函数不同的频率了——在sin和cos函数的自变量上乘以不同的值,就可以获得不同频率的sin和cos函数。\n",

|

|||

|

|

"\n",

|

|||

|

|

"$$y = sin(frequency * y)$$\n",

|

|||

|

|

"\n",

|

|||

|

|

"在位置编码的场景下,我们的自变量是样本的位置pos,因此特征的位置(2i和2i+1)就可以被用来创造不同的频率。在这里,我们对pos这个数字进行了scaling(压缩)的行为。具体地说,我们使用了$10000^{\\frac{2i}{d_{\\text{model}}}}$来作为我们缩放的因子,将它作为除数放在pos的下方。但这其实是在pos的基础上乘以$\\frac{1}{10000^{\\frac{2i}{d_{\\text{model}}}}}$这个频率的行为。因此,引入特征位置本身来进行缩放可以带来不同的频率,帮助我们将位置信息pos投射不同频率的三角函数上,确保不同位置(pos)在不同的特征维度(2i和2i+1)上有不同的编码值。\n",

|

|||

|

|

"\n",

|

|||

|

|

"那下一个问题是,这些正余弦函数的频率是随机的吗?我们应该如何控制它呢?正余弦编码最为巧妙的地方来了——通过让位置信息pos乘以$\\frac{1}{10000^{\\frac{2i}{d_{\\text{model}}}}}$这个频率,**特征编号比较小的特征会得到大频率,会被投射到高频率的正弦函数上,而特征编号较大的特征会得到小频率,会被投射到低频率的正弦函数上**👇"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 61,

|

|||

|

|

"id": "d7e3e910-b513-454f-8556-5f19ab50105f",

|

|||

|

|

"metadata": {},

|

|||

|

|

"outputs": [

|

|||

|

|

{

|

|||

|

|

"data": {

|

|||

|

|